README 这是方向臻的学习笔记 和资源存储仓库 (资源请从github下载)。

神经协同过滤 发表:17年四月,world wide web会议,深度学习的网络结构,训练方法,GPU硬件的不断进步,促使其不断征服其他领域

何向南:中科大教授,92年,28岁

点积和矩阵分解的关系:矩阵分解为两个矩阵相乘,又等价于第i行和第j列的点积

矩阵分解的限制性:Jaccard系数作为实际的结果,先计算u1,2,3,而后添加u4,发现,4和2的距离一定比4和3的距离更近

题外话:

Jaccard 主要用于判断集合间相似度,所以他无法像矩阵一样,体现更多的信息。

Cosine 的计算中,则可以把用户对电影的评分信息加进去。

目标:NCF,GMF,MLP,NeuMF

ranking loss:度量学习,相对位置,the objective of Ranking Losses is to predict relative distances between inputs . This task if often called metric learning .

解决方式:使用大量的隐藏因子去学习交互函数。

element-wise product:按元素积

将GMF作为一种特殊的NCF

如果a是恒等函数,h是1的均匀向量

经验:tower structure,halving the layer size for each successive higher layer

generalization ability:泛化能力,适应新样本的能力

神经张量网络,使用加法

神经矩阵分解,使用连结操作

显示评分:回归损失,预测一个值,平方损失

隐式交互:分类损失,预测离散结果,logistic

优化方法:随机梯度下降法

实验环境设置:

数据集,留一法,top-k排序,

HR@10:分母是所有的测试集合,分子式每个用户top-K推荐列表中属于测试集合的个数的总和

NDCG@10:最终所产生的增益(归一化折损累计增益)

BPR:基于矩阵分解的一种排序算法,针对每一个用户自己的商品喜好分贝做排序优化。在实际产品中,BPR之类的推荐排序在海量数据中选择极少量数据做推荐的时候有优势。淘宝京东有在用。部分填充,速度十分快。

eALS:最新的关于隐式数据的协同过滤算法,用一步到位的计算公式全部填充缺失值。

rel表示关联性,就是跟所想要的结果的关联度,0表示没有关联,越高说明关联性越高

i是位置,关联性乘以位置,就是第i个结果所产生的效益

IDCG是理想化的最大效益。

NeuMF,%5更优

NeuMF > GMF > MLP

理论成果

GMF: weights w can simply be absorbed into the embeddings matrices P and Q

总之,应用场景(数据集)不同,采用的方法应该不同,灵活使用推荐算法或模型

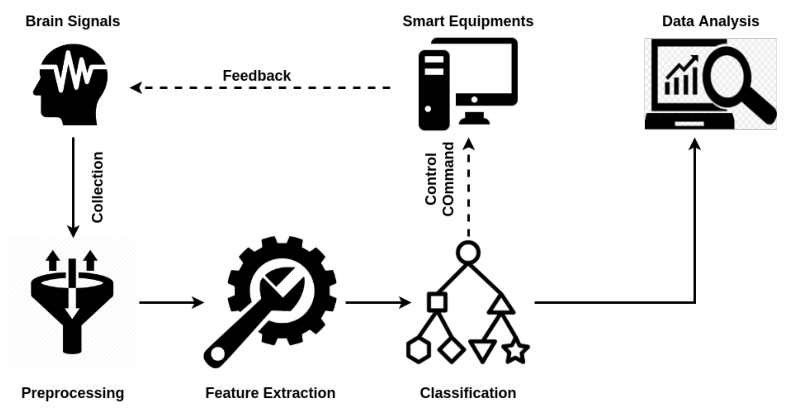

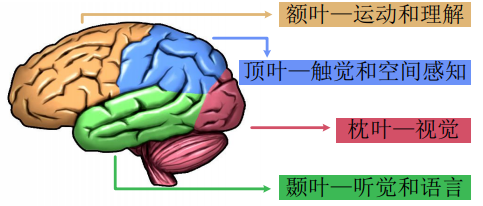

非侵入信号深度学习 工作流程包括几个关键部分:脑信号采集、信号预处理、特征提取、分类和数据分析

头骨让信号保真度为5%(以信噪比(SNR)衡量)

预处理:包含多个步骤,如信号清理(平滑噪声信号或解决不一致性)、信号标准化(沿时间轴对每个信号通道进行标准化)、信号增强(去除直流电)和信号压缩(呈现信号的简化表示)。

分类结果应用:神经疾病诊断、情绪测量和驾驶疲劳检测。

脑机接口的深度学习的分类:

传统BCI所面临的挑战:

大脑信号很容易被各种生物因素(如眨眼、肌肉伪影【肌肉产生的电波对脑电波的影响】、疲劳和注意力集中程度)和环境因素(如噪音)所破坏

深度学习好处:

深层神经网络和第二层神经网络都能捕获潜在的,具有代表性的特征。

综述论文贡献:

对非侵入性脑信号论文的全面性调查

功能性近红外光谱(functional near - infrared spectroscopy , fNIRS )【利用血液的主要成分对600-900nm近红外光良好的散射性,从而获得大脑活动时氧合血红蛋白和脱氧血红蛋白的变化情况,产生功能性神经影像】

综述论文内容:

讨论了流行的深度学习技术和最新的脑信号模型,为在给定特定信号亚类的情况下选择合适的深度学习模型提供了实用指南。

回顾了基于深度学习的脑信号分析的应用,并指出了一些有前景的未来研究课题。

基于信号收集方法的非侵入性脑信号分类(虚线不调查)

P300包含于ERP中。

ERP:一种特殊的脑诱发电位,通过有意地赋予刺激以特殊的心理意义,利用多个或多样的刺激所引起的脑的电位。它反映了认知过程中大脑的神经电生理的变化,也被称为认知电位,也就是指当人们对某课题进行认知加工时,从头颅表面记录到的脑电位。

其他脑成像技术(fNIRS,fMRI)中的视觉/听觉任务未曾有采用过深度学习,但理论上可行。

第三节,概述常用的深度学习模型

分类模型:Multi-Layer Perceptron (MLP) , Recurrent Neural Networks(RNN) , Convolutional Neural Networks (CNN) --> 特征提取和分类

表示模型:Autoencoder(AE), Restricted Boltz-mann Machine (RBM) , Deep Belief Networks(DBN) --> 只能特征提取

生成模型:Variational Autoencoder (VAE),Generative Adversarial Networks (GANs) --> 主要用于生成脑信号样本,增强训练集

各个非侵入式脑信号特征:

第四节,最先进的脑信号深度学习技术

4.1.1.1 睡眠脑电:

主要用于识别睡眠阶段、诊断睡眠障碍或培养健康习惯

睡眠阶段包括清醒、非快速眼动1、非快速眼动2、非快速眼动3、非快速眼动4和快速眼动。

美国睡眠医学学会(AASM)建议将睡眠分为五个阶段:清醒、非快速眼动1、非快速眼动2、慢波睡眠(SWS)和快速眼动。

识别睡眠阶段,一般通过滤波器实现脑电信号的预处理,数据通常是30s的窗口,50hz。

分类模型:CNN用于单通道EEG的睡眠阶段分类,86%精确度

表示模型:DBN-RBM(深度置信网络-受限玻耳兹曼机)从睡眠脑电信号中提取功率谱密度 (PSD,表示随机信号的强度),在局部数据集达到F-1值92.78%(兼顾召回率和精确度)

4.1.1.2 运动想象脑电:

深度学习在运动想象脑电图和真实运动脑电图的分类上显示出优越性

分类模型:大多使用CNN来识别脑电图,例如:

从EEG信号中学习情感信息,构建改进的LSTM控制智能家电

4.1.1.3 情绪脑电图

个体的情绪可以从三个方面来评价:评价值(积极感情的值)、唤起度(激动的程度)和控制力 。

这三个方面的结合形成了恐惧、悲伤和愤怒等情绪,这些情绪可以通过脑电图信号来揭示。

分类模型:传统上使用MLP,CNN和RNN正在越来越流行

通过多通道脑电信号转化为二维矩阵来捕捉通道之间的空间相关性

4.1.1.4 精神病脑电图

大量研究人员利用脑电图信号诊断神经系统疾病,特别是癫痫发作

分类模型:CNN广泛应用于癫痫发作的自动检测

CNN对癫痫发作的高通量(1hz)EEG信号进行研究,获得了94.7%的AUC

在抑郁症检测上采用了13层CNN模型,在30名受试者的局部数据集上进行了评估,基于左半球和右半球EEG信号的准确率分别为93.5%和96.0%

4.1.1.5 数据增强

实验1:EEG信号转换为图像

首先证明了脑电波中包含的信息被赋予了区分视觉对象的能力

然后使用RNN提取了更健壮、更具区分性的脑电数据表示。

最后,利用GAN范式训练了一个由学习的EEG表示调节的图像生成器,该生成器可以将EEG信号转换为图像

实验2:将EEG信号转换为图像

当受试者观察屏幕上的图像时,采集脑电图信号。将脑电信号的潜在结构作为输入,提取脑电信号的潜在特征。

GAN的产生器和鉴别器均由卷积层构成。该发生器根据训练后的脑电信号生成图像。

实验3:癫痫发作数据增强的GAN(生成式对抗网络)

作者证明了GAN优于其他生成模型,如AE和VAE(可变自动编码器)。增强后,分类准确率从48%提高到82%。

4.1.1.6 其他

实验1:听觉/视觉刺激(持续存在的刺激)如何影响脑电图信号

13名受试者受到23种节律性刺激的刺激,其中包括12种东非和12种西方刺激。

对于24类分类,提出的CNN平均准确率为24.4%。

之后,作者利用卷积AE进行表征学习,CNN用于识别,12类分类的准确率达到27%

实验2:区分是在听歌还是想象歌曲

提出两个深度学习模型,使用二值分类任务,所提出的CNN和DBN-RBM(三个RBM)的准确率分别为91.63%和91.75%。

实验3:自发脑电图可以用来区分使用者的心理状态(逻辑与情绪)

实验4:认知负荷(处理具体任务时加在学习者认知系统上的负荷)或体力负荷对EEG的影响

实验5:在不同心理负荷下,受试者之间及受试者本身中的一般特征是恒定的。

脑电信号经低通滤波器滤波后,转换到频域,计算功率谱密度(PSD)。

提取的PSD特征被输入到去噪D-AE结构中,以便于进一步的细化。最终得到了95.48%的准确率。

实验6:驾驶员疲劳检测 --- 三维CNN

实验7:驾驶员疲劳检测 --- ICA+DBN-RBM

达到85%左右准确率,二分类(“昏昏欲睡”或“警惕”)。

实验8:驾驶员疲劳值检测 --- DBM-RBM+SVM,精度达到73.29%

实验9:调查了不同低负荷水平下驾驶员的心理状态。提出了一种基于脑电信号直接检测驾驶负荷的CNN方法。

实验10:基于EEG信号的眼睛状态(闭或开)的检测

三个RBM的DBN-RBM和三个AEs的DBN-AE,98.9%的高准确率

事件相关去同步(ERD)表示正在进行的EEG信号的功率下降,

事件相关同步(ERS)表示EEG信号的功率增加

实验11:采用CNN在观看特定视频时通过脑电图检测学校欺凌行为。

实验12:结合RNN和CNN提出了一个级联框架来预测个体的情感水平和个人因素(五大人格特征、情绪和社会背景)。

实验13:试图根据使用者的脑电图信号来识别他们的性别

采用标准的CNN算法,在局部数据集上实现了81%的二元分类精度

实验14:驾驶员的脑电图信号可以区分刹车意图和正常驾驶状态

**实验15:**将大脑信号和推荐系统结合起来,通过EEG信号预测用户的偏好。

共有16名受试者接受了60个手镯状物体作为旋转视觉刺激物(3D物体)时采集脑电信号的实验。

然后采用MLP预测用户喜欢或不喜欢。本次勘探的预测精度为63.99%。

**实验16:**试图探索一个可用于各种脑信号范式的共同框架,并评估鲁棒性。基于compact CNN的EEGNet [73]

4.1.2.1 ERP事件相关电位

在大多数情况下,ERP信号都是通过P300现象来分析的。

4.1.2.1.i VEP视觉诱发电位

较热门。

实验1:通过深度学习提取具有代表性的特征来研究运动开始的 VEP(mVEP)

压缩后的信号被发送到DBN-RBM算法中,以获取更抽象的高层特征。

实验2:P300信号特征提取

通过带通滤波器(2∼35hz)过滤视觉刺激的P300信号,

该模型包括一个2D CNN来捕获空间特征,然后在LSTM层中进行时间特征提取。

实验3:使用AE模型进行特征提取,然后使用支持向量机分类器。

实验中,每一段包含150个点,分为五个时间步,每一步有30个点。

实验4:DBN-RBM代表性模型与支持向量机分类器相结合进行隐藏信息测试(??),97.3%准确率

实验5:提高P300写字机准确率

一种基于CNN的新模型,该模型包括5个具有不同特征集的低层CNN分类器

第三届BCI竞赛数据集II中,最高准确率达到95.5%

4.1.2.1.ii AEP听觉诱发电位

较少研究。

实验1:提出并测试了18个CNN结构来对单次试验的AEP信号进行分类。

实验分析表明,无论卷积层数多少,CNN框架都能有效地提取时空特征。

4.1.2.1.iii RSVP快速连续视觉表示

CNN和MLP在这里取得一定成功。

实验1:一种针对RSVP的主题间和任务间检测的CNN模型。

实验结果表明,CNN在交叉任务中表现良好,但在跨主题情境下表现不佳。

实验2:比较了三种不同的深度神经网络算法,以预测受试者是否看到了目标。

MLP、CNN和DBN模型的AUC分别为81.7%、79.6%和81.6%。

...

4.1.2.2 SSEP稳态诱发电位

大多数研究稳态视觉诱发电位(SSVEP),指由闪烁的视觉刺激引起的脑震荡,通常产生于顶叶和枕叶。当施加一个恒定频率的外界视觉刺激时,与刺激频率或谐波频率相一致的神经网络就会产生谐振,导致大脑的电位活动在刺激频率或谐波频率处出现明显变化,由此产生SSVEP信号。

实验1:寻找SSVEP的中间表现形式。

提出了一种结合CNN和RNN的混合方法,直接从时域中提取有意义的特征,准确率达到93.59%。

实验2:紧凑CNN直接处理原始结果

实验3:采用了一种典型的稀疏AE模型,从多频视觉刺激中提取SSVEP的不同特征。

该模型采用了一个softmax层进行最终分类,准确率为97.78%。

...

4.2 fNIRS功能性近红外光谱

较少研究。

定义:利用血液的主要成分对600-900nm近红外光良好的散射性,从而获得大脑活动时氧合血红蛋白和脱氧血红蛋白的变化情况,产生功能性神经影像。

实验1:基于fNIRS信号分析了两种心理任务(心算和休息)之间的差异。

从前额叶皮层fNIRS中手动提取了6个特征,并比较了6个不同的分类器。

结果表明,MLP的准确率为96.3%,优于所有传统的分类器,包括SVM、KNN、naivebayes等。

实验2:试图通过fNIRS信号检测受试者的性别。

作者使用三层隐层去噪D-AE来提取显著特征并输入MLP分类器进行性别检测。

该模型在本地数据集上进行了评估,平均准确率为81%

相比fMRI信号,fNIRS具有更高的时间分辨率和更经济的价格

4.3 fMRI功能性磁共振成像

利用磁振造影来测量神经元活动所引发的血液变化。从而监测大脑活动

该领域,近年用了不少深度学习方法,特别是认知功能障碍的诊断上。

分类模型中,CNN是一种很有前途的fMRI分析模型

实验1:根据功能磁共振成像(fMRI)和核磁共振成像(MRI)数据,应用深层CNN识别阿尔茨海默病。

实验2:利用一种新的CNN算法建立了一种基于fMRI的脑肿瘤分割方法,它可以同时捕获全局特征和局部特征

实验3:采用CNN模型处理脑瘤患者的功能磁共振成像(fMRI)进行三类识别(正常、水肿或活动性肿瘤)。在BRATS数据集上对模型进行了评估,得到了88%的F1分数

实验4:利用CNN进行特征提取。提取的特征用支持向量机分类,用于癫痫发作的检测

大量文章证明了表示模型在识别功能磁共振成像数据方面的有效性。

实验1:利用一个由三个RBM分量组成的DBN-RBM从ICA处理的fMRI中提取显著特征,最终在四个公共数据集上实现了90%以上的F1平均测量值。

实验2:DBN-RBM和DBN-AE检测阿尔茨海默病

实验3:应用D-AE模型从静止状态的fMRI数据中提取潜在特征,用于诊断轻度认知功能障碍(MCI)。-

将潜在特征输入支持向量机分类器,识别率达到72.58%。

自然图像的重建引起广泛的关注。

实验1:从fMRI中重建视觉刺激的深卷积GAN,

发生器包含四个卷积层,以便将输入的fMRI转换为自然图像。

用于测量由大脑中神经元的电活动引起的磁场。通过磁变化反映大脑活动

实验1:致力于通过去除诸如眨眼和心脏活动等伪影来细化MEG信号。

最后,该方法在局部数据集上的灵敏度达到85%,特异性达到97%。

实验2:目标同实验1

基于深度学习的大脑信号系统主要用于检测和诊断精神疾病,如睡眠障碍、阿尔茨海默病、癫痫发作等。

睡眠障碍:

对于睡眠障碍的检测,大多数研究都集中在基于睡眠自发脑电图的睡眠阶段检测上。DBN-RBM和CNN被广泛应用于特征选择和分类。

阿尔茨海默病:

功能磁共振成像在阿尔茨海默病的诊断中有着广泛的应用。优点是高空间分辨率,几项研究的诊断准确率均在90%以上。

癫痫:

癫痫发作的检测主要基于自发脑电图。流行的深度学习模型包括独立的CNN和RNN,以及结合RNN和CNN的混合模型

例如,将D-AE应用于特征提取,然后将支持向量机应用于癫痫诊断

研究人员已经证明了深度学习模型在检测大量精神疾病方面的有效性,如抑郁症[113]、发作间期癫痫放电(IED)[230]、精神分裂症[211]、克雅氏病(CJD)[123]和轻度认知障碍(MCI)

随着物联网的发展,越来越多的智能环境可以连接到大脑信号。

例如,辅助机器人可用于智能家居,其中机器人可以由个体的大脑信号控制。

基于视觉刺激的自发EEG和fNIRS信号的机器人控制问题。

P300 speller,深度学习模型使大脑信号系统能够从非300片段中识别出P300片段

使用一种结合RNN、CNN和AE的混合模型,从MI-EEG中提取信息特征来识别用户想要说的字母。

应用于身份识别和身份验证

前者通过多类别分类来识别一个人的身份[6]。后者进行二元分类来决定一个人是否被授权

个性化信息(如多媒体内容)检索或智能人机界面设计

试图根据脑电图信号,使用深度学习算法(如CNN及其变体)将用户的情绪状态分为两类(积极/消极)或三类(积极、中性和消极)

DBN-RBM是从情绪自发脑电图中发现隐藏特征的最具代表性的深度学习模型

一般情况下,如果驾驶员的反应时间小于0.7秒,则认为驾驶员处于警戒状态;如果反应时间大于2.1秒,则认为驾驶员处于疲劳状态。

目前,基于EEG的驾驶困倦可以得到较高的识别率(82%∼95%)

适当的心理负荷对于维持人类健康和预防事故是必不可少的。

持续脑电来评估操作者的心理负荷,以警告随着时间,操作者的性能下降。

通过一个循环卷积框架研究了跨多个心理任务的心理负荷测量。该模型同时从空间、频谱和时间维度学习脑电特征,二值分类(高/低负荷水平)的准确率为88.9%

推荐系统,紧急刹车,视觉对象识别,内疚测试,隐藏信息测试,区分性别。

https://en.wikipedia.org/wiki/Self-driving_car https://physionet.org/physiobank/database/sleep-edfx/ https://massdb.herokuapp.com/en/ https://physionet.org/pn3/shhpsgdb/ https://physionet.org/pn6/chbmit/ https://www.isip.piconepress.com/projects/tuh.eeg/html/downloads.shtml https://physionet.org/pn4/eegmmidb/ http://www.bbci.de/competition/ii/ http://www.eecs.qmul.ac.uk/mmv/datasets/amigos/readme.html http://bcmi.sjtu.edu.cn/seed/download.html https://www.eecs.qmul.ac.uk/mmv/datasets/deap/ https://owenlab.uwo.ca/research/the.openmiir.dataset.html http://adni.loni.usc.edu/data-samples/access-data/ https://www.med.upenn.edu/sbia/brats2018/data.html

第六节,分析和指南,根据大脑信号选择适当的模型

70%的EEG论文关注自发EEG(133种出版物)。自发的脑电图分成几个方面:睡眠、运动想象、情绪、精神疾病、数据增强和其他 。

睡眠:总共19篇,6篇使用CNN,2篇RNN,还有3种RNN+CNN的混合模型。

运动想象:广泛使用CNN和基于CNN的混合模型。表示型模型常用DBN-RBN提取潜在特征。

情绪:总共25篇,超过一半使用表示模型(D-AE,D-RBM,DBN-RBM)。最典型的状态识别工作将用户的情绪识别为积极、中立或消极。进一步对配价和唤起率进行分类

精神疾病:大部分相关研究集中在癫痫发作和阿尔茨海默病的检测上。大部分相关研究集中在癫痫发作和阿尔茨海默病的检测上。许多研究可以达到90%以上的高准确率。在这一领域,标准的CNN模型和D-AE是普遍存在的。一个可能的原因是CNN和AE是最著名和最有效的深度学习模型的分类和降维

数据增强:基于GAN的数据扩充

其他:大约有30个研究正在调查其他自发脑电图,如驾驶疲劳、视听刺激冲击、认知/心理负荷和眼睛状态检测。这些研究广泛应用标准CNN模型和变体。

视觉诱发电位(VEP)引起大量研究(21篇)。6种混合模型。

快速连续视觉表示(RSVP),只有CNN算法。

fNIRS图像的研究很少采用深度学习的方法,主要的研究只是采用简单的MLP模型。我们认为,由于fNIRS具有高便携性和低成本的特点,应引起更多的关注。

至于功能磁共振成像,有23篇论文提出了深度学习的分类模型。CNN模型因其在图像特征学习中的突出表现而被广泛应用。

6.2 深度学习模式的选择标准

结论1:大多数采用判别模型。

结论2:超过70%的判别性模型都采用了CNN及其变体,为此我们提供了以下原因:

首先,CNN的设计足够强大,能够从EEG信号中提取潜在的鉴别特征和空间相关性 进行分类。因此,有些研究采用CNN结构进行分类,而有些研究则采用CNN结构进行特征提取。

CNN在一些研究领域(如计算机视觉)取得了巨大的成功,更容易找到代码。

一些脑信号图(如功能磁共振成像)是自然形成的二维图像,有利于CNN进行处理。

结论3:表示模型中,DBN,尤其是DBN-RBM是最常用的特征提取模型。原因有二:

【大多数采用DBN-RBM模型的作品都是在2016年之前出版的。可以推断,在2016年之前,研究人员更倾向于使用DBN进行特征学习,然后使用非深度学习分类器;但最近,越来越多的研究希望采用CNN或混合模型进行特征学习和分类。】

结论4:生成模型很少独立使用,基于GAN和VAE的数据增强和图像重建主要集中在fMRI和EEG信号上,有前途。

结论5:53篇论文中,RNN和CNN的组合约占五分之一,结合后具有很好的时空特征提取能力。

结论6:表示模型+判别模型也很常用,28篇中有这种方法,所采用的表示模型多为AE或DBN-RBM,同时所采用的判别模型多为CNN。

将脑信号分析应用于医疗领域是目前最吸引人和最热门的领域。

一般来说,大多数深度学习算法在多个睡眠阶段场景下都能达到85%以上的准确率。

对于fMRI图像,CNN在网格化空间信息学习方面具有很大的优势,使其获得了非常全面的分类准确率(90%以上)。

至于癫痫发作,一般是根据脑电图信号进行诊断。单一的RNN分类器(如LSTM或GRU)由于其良好的时间依赖性表示能力,似乎比其对应的分类器工作得更好。

检测阿尔茨海默病的一个关键方法是通过测量大脑特定区域的功能来分析大脑信号。具体来说,可以通过自发的脑电图信号或功能磁共振成像来进行诊断

由于视觉诱发电位明显且易于检测,许多研究都集中在VEP信号上。一个重要的数据来源是来自第三届BCI竞赛。

脑电信号具有较高的时间分辨率,能够捕捉快速变化的情绪。因此,几乎所有的研究都是基于自发的脑电信号。这些信号是在被试观看视频时收集的,视频被认为是激发受试者特定情绪的。主要是使用层次化CNN,DBN-RBM结合强分类器,前一种更好。

一般框架需要两个关键能力:注意机制和捕捉潜在特征的能力。前者保证了框架能够集中于输入信号中最有价值的部分,而后者使框架能够捕捉到与众不同和信息丰富的特征。

方法2:CNN是最适合捕捉不同层次和范围特征的结构。未来,CNN可以作为一种基本的特征学习工具,并与适当的注意机制相结合,形成一个通用的分类框架。

方法1:可以考虑如何解释由深层神经网络导出的特征表示,学习的特征与任务相关的神经模式或精神障碍的神经病理学之间的内在关系。

方法1:实现这一目标的一个可能的解决方案是建立一个个性化的迁移学习模型 。

个性化情感模型可以采用转换参数传递的方法来构造个体分类器,并学习映射数据分布和分类器参数之间关系的回归函数

方法2:从输入数据中挖掘与主题无关的组件。输入的数据可以分解为两部分:一个依赖于主题的主题相关组件和一个所有主题都通用的主题无关组件。一个混合多任务模型可以同时处理两个任务,一个侧重于人的识别,另一个侧重于类识别。在类识别任务中,需要一个训练良好、收敛良好的模型来提取与主题无关的特征。

Adversarial V ariational Embedding (对抗性变异嵌入)-----> 高质量生成模型

有两种方法可以增强无监督学习:

一种是利用众包(给大众志愿者)方法对未标记的观测值进行标记;

另一种是利用无监督域自适应学习,通过线性变换来调整源脑信号和目标信号的分布

在真实场景中,大脑信号系统需要接收实时的数据流并实时产生分类结果

由于受试者注意力不集中和设备固有的不稳定性(例如采样率波动)等诸多因素,采集到的实时信号更具噪声和不稳定性。通过我们的实验,在线脑信号系统的准确率通常比同类系统低10%。

方法:投票和聚合来平均多个连续样本的结果,提高解码性能。

脑电采集设备主要有三种:不便携头戴式、便携式头戴式和耳式脑电传感器。

第一种,采样频率高,信道数多,信号质量高,但价格昂贵。适合医院体检。

第二种,(例如Neurosky、Emotiv EPOC),有1∼14个通道和128∼256采样率,但读数不准确,长期使用后会造成不适。

第三种,还在实验室阶段。EEGrids是唯一商业化的耳脑电设备。

受限玻尔兹曼机 RBN (2000年后流行)

RBM可以看做是一个编码解码的过程,从可见层到隐藏层就是编码,反之是解码。对于每个训练样本, 期望编码解码后的可见层输出和之前可见层输入的差距尽量的小。

RBM详细推导过程:https://www.cnblogs.com/pinard/p/6530523.html

深度波尔茨曼机Deep Boltzmann Machine (DBM)

RBN实现的深度置信网络 (DBN-RBN)

与DBM的区别是隐藏层之间为单向的。优化计算更简单。

降噪自编码器(D-AE)

DAE(Denoising Autoencoder)的核心思想是,一个能够从中恢复出原始信号的神经网络表达未必是最好的,能够对“损坏”的原始数据编码、解码,然后还能恢复真正的原始数据,这样的特征才是好的。

卷积神经网络(CNN)

循环神经网络(RNN)

长短期记忆(LSTM)

评价标准

一种JSSP的DRL环境 作者使用单智能体和多智能体来划分目前DRL方法的两大类

多智能体:2018 DQN,2020 DDPG效果更好

单智能体:2020 GNN+MLP,2020 CNN(处理时间,时间表,机器利用率)+DRL,2019 DQN+Rule

使用标准的JSSP文件(txt)方式读写,样例可看困惑点2

一个二维数组solution,若是-1表示没排,否则为开始运行的时间步

离散集合={Job是否加工-J$_i$,空闲状态},机器选择行为,行为对应作业和空闲

因此,对于每个Job而言,需要完成|M|个行为(机器的数量)。每个行为表示Job在每台机器上的加工时间,如果不需要在该机器上执行,可以当作一个0?

对于每个机器而言,需要运行至少|J|个与job有关的行为,加上若干个no-op(空闲)操作。

该函数相对于makespan能得到更紧密的解。

不用执行到最后一步,就能获得一个局部reward。

makespan可以看成是全局p+empty,而这里是局部的p-empty,那么改变之处在于系数-1和全局变为局部。

作者新建自己环境的代码十分受用,让我对新建gym的环境有了一个详细的了解

wandb和ray两个库的使用具有一定的启发,但可能更适合较大的项目

第四页中的问题对称性,讲述了一些JSS问题的对称性,通过破坏对称性,而降低搜索解空间的大小

同一时间步长中,操作具有对称性,也就是操作的执行顺序待定。怎么解决?以及为什么说直接赋予机器从小到大的索引会导致失去全局视图。

同一机器中,"运行"操作和"无运行"操作具有对称性,也就是op和no-op两者的执行顺序待定。解决方案:当no-op时,其他的操作临时设未不可执行。以及通过非最终状态优先调度的规则。

复现代码时候遇到的问题(已解决,记录):

'env': 'JSSEnv:jss-v1',该环境已经创建好并上传gym库中,作者使用的ray库会自动生成一个包含该环境名字的本地日志。而windows文件不允许冒号,所以需要修改ray库的底层代码中的日志文件名字生成方式。

大体分三块

强化学习环境:已经注册成gym库的环境,各种强化学习包都可以用。

CP.py:是使用OR tools的求解器,进行求解的方法,能达到比较好的解。

mian.py:使用ray中的PPO算法,只修改了一个全连接层,然后定义了一些参数,就可以使用作者的环境来训练。

其他读后感主要写在代码注释中,目前均已跑通。

y u i = { 1 , i f i n t e r a c t i o n i s o b s e r v e d ; 0 , e l s e y_{ui}= \begin{cases} 1,\ if\ interaction\ is\ observed;\\0,\ else \end{cases} y u i = { 1 , i f in t er a c t i o n i s o b ser v e d ; 0 , e l se Scheduling 用于发布个人阅读深度强化学习+Scheduling领域的读后感

Pytorch 目前根据官网教程和《动手学习深度学习》pytorch版学习

Deep Learning 主要根据《神经网络与深度学习》书籍进行读书笔记,其中部分截图上传到github图床,需要翻墙才能正常阅读.

1.2自动梯度

复制 # 设置跟踪向量

x = torch.ones(2, 2, requires_grad=True)

print(x)

复制 tensor([[1., 1.],

[1., 1.]], requires_grad=True)

复制 tensor([[3., 3.],

[3., 3.]], grad_fn=<AddBackward0>)

复制 Help on AddBackward0 object:

class AddBackward0(object)

| Methods defined here:

|

| __call__(self, /, *args, **kwargs)

| Call self as a function.

|

| name(...)

|

| register_hook(...)

|

| ----------------------------------------------------------------------

| Data descriptors defined here:

|

| metadata

|

| next_functions

|

| requires_grad

复制 z = y * y *3 # z = (x+2)^2 *3

out = z.mean() # out = (x+2)^2 *3/4

z, out

复制 (tensor([[27., 27.],

[27., 27.]], grad_fn=<MulBackward0>),

tensor(27., grad_fn=<MeanBackward0>))

复制 a = torch.randn(2, 2)

a = ((a*3)/ (a-1))

# 默认为Flase

print(a.requires_grad)

# 设置梯度为True

a.requires_grad_(True)

print(a.requires_grad)

b = (a*a).sum()

print(b.grad_fn)

复制 False

True

<SumBackward0 object at 0x7fd230a89d90>

复制 # 求出out对x在x=1的偏导数

out.backward()

x.grad

复制 tensor([[4.5000, 4.5000],

[4.5000, 4.5000]])

复制 x = torch.randn(3 , requires_grad=True)

y = x *2

# norm是求L2范数,平方之和而后开方

while y.data.norm() < 1000:

y = y *2

print(y)

复制 tensor([ 277.2757, 3.3198, -986.0908], grad_fn=<MulBackward0>)

复制 v = torch.tensor([0.1, 1.0, 1.0], dtype=torch.float)

# 将向量v传递给backward

y.backward(v)

# 计算向量-雅可比积,也就是在x=v的时候的梯度

x.grad

# 下面表示2^11次方

复制 tensor([ 204.8000, 2048.0000, 2048.0000])

复制 print(x.requires_grad)

print((x ** 2).requires_grad)

# 取消求梯度

with torch.no_grad():

print((x ** 2).requires_grad)

复制 print(x.requires_grad)

# 取消求梯度

y = x.detach()

print(y.requires_grad)

print(x.eq(y))

复制 True

False

tensor([True, True, True]) 大多基于静态数据上,无法对快速变化的大脑信号进行准确分类【最先进的多类别运动图像脑电图分类准确里低于80%】

功能性磁共振成像(FMRI,functional magnetic resonance imaging)【是一种新兴的神经影像学方式,其原理是利用磁振造影来测量神经元活动所引发的血液变化。从而监测大脑活动】

脑磁图(Magneto Encephalo Graphy,MEG)【是一种非侵入性技术,用于测量由大脑中神经元的电活动引起的磁场。通过磁变化反映大脑活动】

CNN+MLP来预测睡眠阶段

MLP接收经短时傅立叶变换(STFT)处理的0.5~32hz的频谱信号。

CNN+LSTM的睡眠阶段自动评分模型、

前者用于发现”time-invariant dependencies“

先用CNN捕获潜在的连续特征,然后应用弱分类器选择重要特征进行最终分类

研究CNN如何通过MI EEG样本序列来表示光谱特征

另外,还有MLP分类(前期对EEG相位【波峰,波谷】特征敏感,后期对EEG幅度特征敏感)

表示模型:DBN具有很高的代表性,被广泛应用,例如:

利用离散小波变换对脑电信号进行处理,然后应用基于声发射去噪的DBN-AE

遗传算法(用于超参数调整)和MLP(用于分类)的组合

混合模型:

利用CNN从EEG信号的时间、频域和位置信息中提取高级表示,然后使用一个包含7个AEs的DBN-AE作为分类器

使用去噪AE进行降维,将multi-view CNN与RNN相结合,在公共数据集上实现了对潜在时空信息的发现,平均准确率为72.22%。

时空递归神经网络:利用多向RNN层来发现长距离上下文线索,使用双向RNN层来捕获先前空间RNN产生的序列特征

表示模型:DBN-RBN因为情感识别的无监督表示能力强而广泛使用

用三个RBM和一个RBM-AE的DBN-RBM算法来预测情感状态

将DBN-RBM与SVM和隐马尔可夫模型(HMM)相结合来预测情感状态

提出五个隐层的D-RBM算法,用于情感识别中重要的频率模式(??)和信息通道(了解情感的渠道)的搜索

首先剔除高误差通道,然后根据剩余通道的代表性特征,采用D-RBM方法进行情感状态识别

双模深度自动编码器(BDAE)来研究男性和女性的情绪模式差异,该模型同时接收EEG和眼球运动特征,并在融合层中共享信息,该层与支持向量机分类器相连接。

结果:女性在恐惧情绪上具有较高的脑电信号差异性,而男性在悲伤情绪上具有较高的差异性。

跨个体 (克服不同受试者或不同实验阶段采集的样本之间的不匹配分布):结合AE和子空间对齐方法,提出了一种无监督域自适应技术,称为子空间对齐自动编码器(SAAE)。在独立场景下,该方法的平均准确率为77.88% 。[94]

混合模型:常用的是RNN+MLP

采用LSTM架构从情绪EEG信号中提取特征,并将特征转发到MLP中进行分类

多视角集成分类器,利用多模态生理信号识别个体情绪。集成分类器包含多个D-AEs(去噪自编码器?),具有三个隐藏层和一个融合结构。

然后将D-AE的输出发送到由另一个D-AE组成的融合结构

结合DBN-RBM有效特征提取和MLP进行分类,实现了一个情感识别系统

利用CNN结构提取多通道EEG信号的合适特征,将阿尔茨海默病从轻度认知障碍患者和健康对照组中进行分类

回声状态网络(ESNs)模型,它是RNN的一个特殊类别,用来区分RBD和健康个体

眼运动行为障碍(RBD)可引起帕金森病(PD)等多种精神障碍疾病

使用CNN提取潜在特征,并输入随机森林分类器,以最终检测新生儿癫痫发作

表示模型:对于疾病检测,一种常用的方法是采用具有代表性的模型(例如DBN),然后使用softmax层进行分类

采用DBN-AE从癫痫发作的EEG信号中提取信息特征。将提取的特征输入到传统的logistic回归分类器中进行癫痫检测。

多视图DBN-RBM结构来分析抑郁症患者的EEG信号。

该方法包含多个输入通路,由两个RBM组成,每个通路对应一个EEG通道。

所有的输入路径将合并成一个由另一个RBMs组成的共享结构。

混合模型:一种流行的混合方法是RNN和CNN的结合

研究了CNN-LSTM在信道选择后检测癫痫的性能,灵敏度(实际真,结果真)在33%到37%之间,而假警报(实际假,测出真)在38%到50%之间。

通过整合时间和空间信息来自动解释EEG。二维和一维cnn捕捉空间特征,LSTM网络捕捉时间特征。

将D-AE和MLP结合起来。

首先用0.5∼70hz的带通滤波后,将其送入具有两个隐藏层的D-AE中进行特征表示。

最后,在局部数据集上,MLP分类器的准确率达到81∼83%。

卷积自动编码器,用卷积和去卷积层代替标准声发射中的完整连接层,以无监督的方式提取癫痫特征

从时间采样、通道批量和窗口采样三个层面对DBN-RBM进行了评估。实验表明,通道批量的性能优于其他两级。

大脑信号控制的外骨骼可以帮助那些在行走和日常活动中损坏下肢运动系统的残疾人。

其他一维信号(如EEG)可以转换成二维图像,供CNN进一步分析。转换方式如下:

1)将每个时间点转换为二维图像;假设我们有32个通道,我们可以在每个时间点收集32个元素(每个元素对应于一个通道)。收集到的32个元素可以根据空间位置转换为二维图像。

2)将一个片段转换为二维矩阵。假设我们有32个通道,段包含100个时间点。所收集的数据可以被安排为一个形状为[32,100]的矩阵

CNN使用:

只有20%的基于判别模型的论文采用RNN,这远远低于我们的预期,因为RNN在时间特征学习方面表现出了强大的能力。造成这种现象的一个可能原因是RNN对长序列的处理非常耗时,而且脑电信号通常是长序列

MLP算法由于其简单的深度学习结构,其有效性(如非线性能力)不如其他算法,因而不受欢迎。

学习视频:https://www.bilibili.com/video/BV1dt411U7ad?from=search&seid=10143340215037418101

F1值

每到一个时间步(可以定义为1秒或者1分),每台机器进行一个行为选择

最终优先级:如果该Job已分配的行为数量等于|M|-1,说明最后一个动作已经确定了要在哪里执行。

奖励函数:作者定义了一个schedule area,在这区间内计算reward,可以获得更多个奖励函数,从而为强化学习使用。

ray能够快速使得模型快速落地,并变成分布式,相比较spark更简单,更便捷

之后打算:

进一步看懂代码,模型代码很少,基本已看完,但环境的代码很复杂,还没看完。

先使用pytorch替换掉里面的ray的ppo模型,然后逐步调试,理解环境部分的代码

似乎JSSP问题是每个Job在每台机器上都有执行一定时间,还是说我看到的刚好是特例?

R ( s , a ) = p a j − ∑ m ∈ M empty m ( s , s ′ ) p a j 是作到某个时刻的已排程时间总和 a 表示当前行为 , s 表示当前状态 , s ′ 表示下一个状态 e m t p t y m ( s , s ′ ) 是计算机器 m 在两个状态之间的 i d l e 时间 在实现的代码中,作者将所有的 R 都除以一个最大的工序加工时间来达到缩放 R(s, a)=p_{a j}-\sum_{m \in \mathcal{M}} \operatorname{empty}_{m}\left(s, s^{\prime}\right) \\ p_{aj}是作到某个时刻的已排程时间总和\\ a表示当前行为,s表示当前状态,s^{\prime}表示下一个状态 \\ emtpty_{m}(s, s^{\prime})是计算机器m在两个状态之间的idle时间\\ 在实现的代码中,作者将所有的R都除以一个最大的工序加工时间来达到缩放 R ( s , a ) = p aj − m ∈ M ∑ empty m ( s , s ′ ) p aj 是作到某个时刻的已排程时间总和 a 表示当前行为 , s 表示当前状态 , s ′ 表示下一个状态 e m tpt y m ( s , s ′ ) 是计算机器 m 在两个状态之间的 i d l e 时间 在实现的代码中,作者将所有的 R 都除以一个最大的工序加工时间来达到缩放

心理学随机森林 用机器学习(即随机森林):

关系质量的最高预测因子是:(RF里的重要性?)

个体差异最大的预测因子:(方差?)

??特定于关系的变量在基线时 预测的方差高达45%,在每个研究结束时预测的方差高达18%。

Overall, relationship-specific variables predicted up to 45% of variance at baseline, and up to 18% of variance at the end of each study.

??个体差异和伴侣报告除了行为人报告的关系特异性变量外,没有预测效果。

Importantly, individual differences and partner reports had no predictive effects beyond actor-reported relationship- specific variables alone.

结论:

所有个体差异和伴侣经验的总和通过一个人的特定关系经验对关系质量产生影响 ,并且由于个体差异的调节和伴侣报告的调节而产生的影响可能很小 。

??最后,通过任何自我报告变量的组合,发现关系质量的变化 (即,在研究过程中关系质量的增加或减少)在很大程度上是不可预测的 。

已经确定了数百个据称影响浪漫关系质量的变量 。目前的项目使用机器学习来直接量化 和比较11196对浪漫夫妇中许多这样的变量的预测能力 。

人们对关系有自己的判断,比如他们对伴侣的满意程度 和忠诚程度 ,感激程度 ,解释了他们目前满意度的45%。

建立分类树和回归树

随机森林方法使用一个随机的预测器和参与者子集 ,通过一个称为递归分区 的过程,一次一个地测试每个可用预测器的强度 。

举例能够预测非线性关系:

For example, a model with actor- and partner-reported predictors would detect any robust actor × partner interactions (e.g., moderation, attenuation effects, matching effects) that could not be captured in a model featuring actor- or partner-reported predictors alone.

参数设置:

ntree = 5000,树的数量

mtry = 1/3,每个节点上可用于拆分的预测因子数量

R语言中的VSURF包:An R Package for Variable Selection Using Random Forests Robin Genuer

输出:

每个模型都显示了模型解释的总方差量 ,以及作为预测变量的具体变量

模型设置:

对每个数据集进行了21个随机森林模型,

类似地,我们对每个包含忠诚 的数据集(即我们的次要因变量)进行了21个随机森林模型,每个数据集总共有42个随机森林模型(最大值)。

总共有43个数据集,每个数据集最大42个模型

每个数据集的结果在 https://osf.io/4pbfh/

42个模型中的每一个都作为一个??单独的随机效应元分析 进行了检验;

21个满意度荟萃分析分别包含k=43个效应量(effect sizes),每个数据集对应一个21个满意度模型

21个忠诚度元分析每个包含k=31个效应量。有31个数据集有忠诚数据,每个数据集对应一个21个忠诚度模型

满意度和忠诚度的元分析数据文件在https://osf.io/v5e34/。

调节分析Moderation Analyses

分析12种可能的元分析调节因子,

10个是数据集的特征:研究长度、时间点之间的长度、时间点的数量、样本的平均关系长度、样本的平均年龄、开始收集数据的年份、国家、出版状态(至少有1个出版物与未出版过的文献)、样本类型(社区与大学生)、关系状况(约会vs.已婚)。

使用了

初步荟萃结分析结果Primary Meta-Analytic Results

元分析调节Meta-Analytic Moderators

预测限制效应Predictor Restriction Effects

具体结构的预测成功Predictive Success of Specific Constructs

两个问题:

答案:

如果人们设法建立起一种以欣赏、性满足和较少冲突 为特征的关系,并且他们认为他们的伴侣是忠诚的和有反应的 ,那么这些个人的风险因素可能无关紧要。

关系质量可以从各种结构中预测,但有些结构比其他结构更重要,而最接近的预测因素是表征一个人对关系本身感知的特征。

P300数据学习 6次行,6次列,分别有一行和一列包含目标字符,此时引起的反应,类似P300

文件名:example.m

该程序比较session10-run01数据集中,对目标与非目标刺激(即,包含/未包含所需字符的刺激)的响应

文件名:testclass.m

使用一个非常简单的分类器来预测session12-run01中单词的第一个字符

它在增强后使用Cz和310ms处的一个样本进行分类。 它确定目标字符为振幅最高的字符(Cz / 310ms)

它针对session12中的单词01中的第一个字符执行此操作

肾透析移植机器学习 本文综述人工智能/机器学习(AI/ML)算法在肾替代治疗(血液透析,腹膜透析和肾移植)中的研究现状及其影响。

研究了三个医疗领域关于血液透析(hemodialysis,HD),腹膜透析(peritoneal dialysis,PD),肾移植(kidney transplantation,KT)的四个使用AI/ML的电子数据库或研究。

所以分为了HD,PD,KT三类。

AI能比肾脏学家更好的预测:体积,KT/V(一定透析时间内透析器对尿素的清除量与体积的比值。),透析期间发生低血压或心血管事件。

这些实验报告了AI/ML对G5D/T患者的生活质量和生存期的强大影响。

未来几年,人们可能会看到AI/ML设备的出现,它有助于透析患者的管理,从而提高生活质量和生存。

CKD: chronic kidney disease,慢性肾脏病

G5D/T: 终末期肾脏病,需要长期透析治疗。

FDA: US Food and Drug Administration,美国食品和药物监管局

ESRD: end-stage renal disease,终末期肾病患者

RCTs: randomized controlled trial,随机对照试验

AI/ML的方法已经步入平稳期: 美国食品和药物管理局发布了监管框架,用于修改基于AI/ML的软件作为医疗设备。该委员会去年批准了至少15个涉及医疗领域的人工智能/深度学习平台(例如,用于房颤检测、CT脑出血诊断、冠状动脉钙化评分、辅助中风诊断或乳房x线摄影乳房密度)。

有数据集但没成功: 在过去的15年里,许多问题和并发症产生的终末期肾病需要透析,使得人工智能算法得到了初始输入。但上述的成功案例均未发现。

成功案例引起肾学家思考 :医学图像处理对医疗保健的重大影响,手术中的智能机器人,苹果手表对心房颤动检测的影响。

思考 :

下一步目标 :用AI加强透析机(也就是人工肾)的功能。

理论基础 :2019年的论文——基于透析患者特征、历史血流动力学反应和透析相关处方,开发了一个多终点模型来预测特定时段的Kt/V、液体容量去除、心率和血压,

理论意义 :为关于ESRD患者的人工智能研究打开了大门,ml驱动的机器连续自主地改变参数(温度、透析液电解质成分、持续时间和超滤速率),避免透析过程中糟糕的情况(例如低血压)。从而告诉我们,肾脏学是一门“个体化医学”,因为透析过程不一样。

遵循PRISMA指导原则(详看上述专有名词)

搜索PubMed、SCOPUS、Web of Science和EBSCO的电子数据库,最早的论文为2019年8月。

筛选阶段获得77篇论文,通过两名审稿人讨论和协商后,69项符合标准。(对应表1-表3)

图1:筛选过程分为四个流程:

阅读题目和摘要后再剔除,189篇重复,68篇不是手稿(手写),7篇没有临床,13篇没有文本

图2:所有研究分为三大类,12小类:

注:他克莫斯移植后,而不是后移植,指治疗调节。

实验的特征 :

大多数研究是观察性的,除了一个随机对照实验(RCT),超过60%的研究是在2010年之后报道的。

大多数HD(血液透析)研究涉及个体化贫血管理和透析过程参数。准确预测移植排斥反应 或移植后个体化免疫抑制治疗 是AI和KT(肾透析)试验的主要主题。

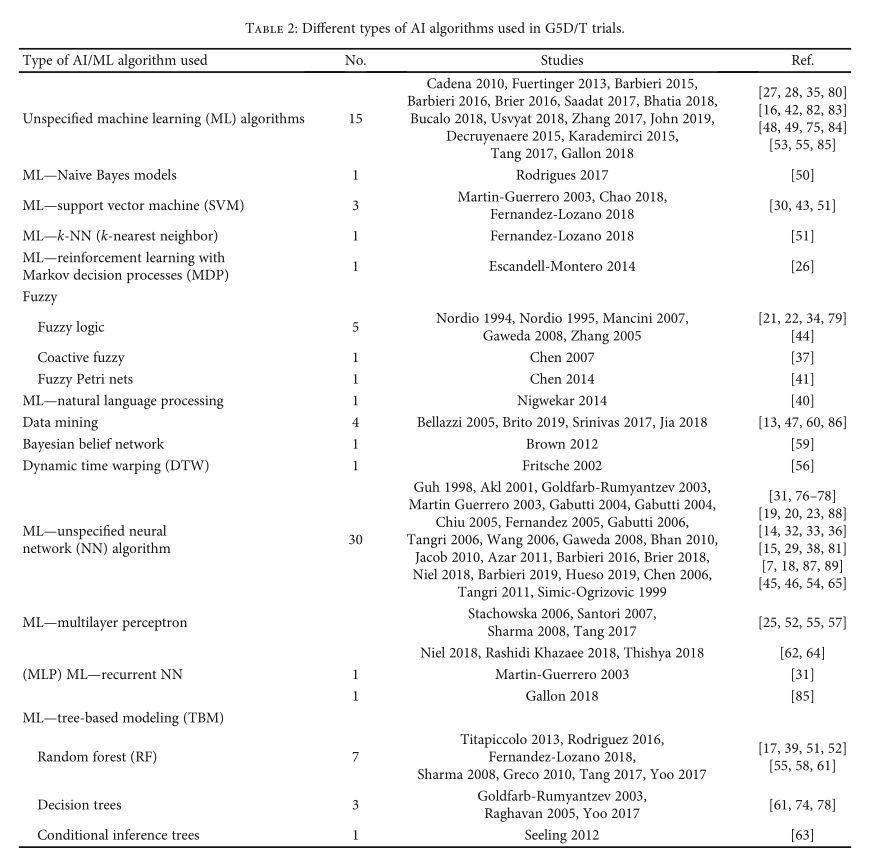

表2:根据AI算法的类型进行分类:

算法分析 :

64项研究包括ML算法: 未指定的、朴素贝叶斯模型、支持向量机(SVM)和马尔可夫决策过程强化学习 (MDP)。

1个K近邻,1个MLP,30个未指定的神经网络算法。

11项研究基于树的模型(TBM),随机森林(RF)或条件推理树

3.1.1 Key Message

人工智能如何改善向HD(血液透析)提供的医疗服务?

预防

人工智能能够为临床结果不令人满意的HD实验确定风险概况。

实验一

一个MLP模型使用了来自111名尿毒症患者5年PD数据库的透析前数据并证明了该方法将透析前患者分为高转运蛋白组和低转运蛋白组有效性。

可以为尿毒症患者提供更好的治疗选择,这将改善PD患者的预后,降低发病率和死亡率。

实验二

利用反向传播方法构造和训练了73-80-1节点结构的MLP,确定PD技术失败的相关因素,以便开发干预措施以减轻风险因素

3.2.1Key Message

人工智能的使用如何改善向PD(腹膜透析)提供的医疗服务?

预防

人工智能被用来确定PD技术失败的相关因素,指定干预措施以减轻风险因素

实验一

NNs可用于预测慢性肾移植排斥反应(作者描述了对27例慢性排斥反应患者的回顾性分析,八个简单变量对排斥反应有很大影响)

实验二

一项对2005年至2011年500名患者的研究使用最大似然算法(SVM、随机森林和离散余弦变换)预测“延迟移植功能”,结果表明线性SVM具有最高的鉴别能力(AUROC为84.3%),优于其他方法。

3.3.1Key Message

人工智能的使用如何改善向KT提供的医疗服务?

诊断

AI能够通过识别一系列实验室数据中的异常模式来检测和报告急性KT排斥反应相关的早期肌酐病程,从而允许快速干预和改善KT病人的后遗症

Contrastive Multi-View Representation Learning on Graphs 2数据操作 2.2.1创建tensor的函数

还有很多函数可以创建

图神经网络基础 节点有节点的属性,边有边的属性

节点可以分为:labeled node和unlabeled node。

图卷积:

graph >> spatial-based convolution(空间卷积)

复制 15 15 # 作业数,机器数

6 94 12 66 4 10 7 53 3 26 2 15 10 65 11 82 8 10 14 27 9 93 13 92 5 96 0 70 1 83 # 奇数是机器索引,偶数是在该机器上的加工时间

4 74 5 31 7 88 14 51 13 57 8 78 11 8 9 7 6 91 10 79 0 18 3 51 12 18 1 99 2 33

1 4 8 82 9 40 12 86 6 50 11 54 13 21 5 6 0 54 2 68 7 82 10 20 4 39 3 35 14 68

5 73 2 23 9 30 6 30 10 53 0 94 13 58 4 93 7 32 14 91 11 30 8 56 12 27 1 92 3 9

7 78 8 23 6 21 10 60 4 36 9 29 2 95 14 99 12 79 5 76 1 93 13 42 11 52 0 42 3 96

5 29 3 61 12 88 13 70 11 16 4 31 14 65 7 83 2 78 1 26 10 50 0 87 9 62 6 14 8 30

12 18 3 75 7 20 8 4 14 91 6 68 1 19 11 54 4 85 5 73 2 43 10 24 0 37 13 87 9 66

11 32 5 52 0 9 7 49 12 61 13 35 14 99 1 62 2 6 8 62 4 7 3 80 9 3 6 57 10 7

10 85 11 30 6 96 14 91 0 13 1 87 2 82 5 83 12 78 4 56 8 85 7 8 9 66 13 88 3 15

6 5 11 59 9 30 2 60 8 41 0 17 13 66 3 89 10 78 7 88 1 69 12 45 14 82 4 6 5 13

4 90 7 27 13 1 0 8 5 91 12 80 6 89 8 49 14 32 10 28 3 90 1 93 11 6 9 35 2 73

2 47 14 43 0 75 12 8 6 51 10 3 7 84 5 34 8 28 9 60 13 69 1 45 3 67 11 58 4 87

5 65 8 62 10 97 2 20 3 31 6 33 9 33 0 77 13 50 4 80 1 48 11 90 12 75 7 96 14 44

8 28 14 21 4 51 13 75 5 17 6 89 9 59 1 56 12 63 7 18 11 17 10 30 3 16 2 7 0 35

10 57 8 16 12 42 6 34 4 37 1 26 13 68 14 73 11 5 0 8 7 12 3 87 2 83 9 20 5 97

复制 import pandas as pd

import numpy as np

from scipy.io import loadmat

复制 # AAS011R06.mat

m = loadmat('Data/P300/v2/AA001.mat')

m

复制 {'__header__': b'MATLAB 5.0 MAT-file, Platform: PCWIN, Created on: Thu Nov 29 14:36:17 2001',

'__version__': '1.0',

'__globals__': [],

'run': array([[3],

[3],

[3],

...,

[8],

[8],

[8]], dtype=uint8),

'trial': array([[ 0],

[ 0],

[ 0],

...,

[192],

[192],

[192]], dtype=uint8),

'sample': array([[ 0],

[ 1],

[ 2],

...,

[28829],

[28830],

[28831]], dtype=uint16),

'signal': array([[-1136, -416, -592, ..., -816, -496, -624],

[-1456, -912, -752, ..., -48, 336, -48],

[-1888, -912, -480, ..., -240, 0, 64],

...,

[-1952, -2416, -2336, ..., -1376, -2096, -1168],

[-2784, -2912, -2912, ..., 144, -800, -48],

[-1872, -1168, -1264, ..., 1008, 304, 816]], dtype=int16),

'TargetCode': array([[0],

[0],

[0],

...,

[0],

[0],

[0]], dtype=uint8),

'ResultCode': array([[0],

[0],

[0],

...,

[0],

[0],

[0]], dtype=uint8),

'StimulusTime': array([[51992],

[51992],

[51992],

...,

[54165],

[54165],

[54165]], dtype=uint16),

'Feedback': array([[0],

[0],

[0],

...,

[0],

[0],

[0]], dtype=uint8),

'IntertrialInterval': array([[1],

[1],

[1],

...,

[1],

[1],

[1]], dtype=uint8),

'Active': array([[1],

[1],

[1],

...,

[1],

[1],

[1]], dtype=uint8),

'SourceTime': array([[52082],

[52082],

[52082],

...,

[54256],

[54256],

[54256]], dtype=uint16),

'RunActive': array([[1],

[1],

[1],

...,

[1],

[1],

[1]], dtype=uint8),

'Recording': array([[1],

[1],

[1],

...,

[1],

[1],

[1]], dtype=uint8),

'IntCompute': array([[0],

[0],

[0],

...,

[0],

[0],

[0]], dtype=uint8),

'Running': array([[1],

[1],

[1],

...,

[1],

[1],

[1]], dtype=uint8)}

复制 for i in m:

try:

print(i,m[i].shape)

except:

continue

# 运行编号(runnr),运行内的强化次数(trinr)和运行内的样品编号(sample)

# 其他部分由于网页乱码看不出来,也可能说明文件中没有展示,需要浏览matlab文件

复制 run (172992, 1)

trial (172992, 1)

sample (172992, 1)

signal (172992, 64)

TargetCode (172992, 1)

ResultCode (172992, 1)

StimulusTime (172992, 1)

Feedback (172992, 1)

IntertrialInterval (172992, 1)

Active (172992, 1)

SourceTime (172992, 1)

RunActive (172992, 1)

Recording (172992, 1)

IntCompute (172992, 1)

Running (172992, 1) 每个字符:

矩阵显示时间2.5s,此时字符强度相等 ==> 视为空白

每一行和每一列被随机增强100ms,增强后,空白75ms

每次实验的单个通道信号量 = {2.5s + [(100+75) x 180 x 字符数]/1000 + 2.5s }x 240 Hz

台湾陈蕴侬视频2020 二、模型结构、损失函数、优化、反向传播

偏差(bias)的理解:相当于给一个初值,然后通过学习调整这个初值。

感知层(perception layer)的理解:每一层相当于一个切割,可以通过二层模拟出一个凸,越多层表达越多场景。

激活函数(activate function):选非线性的,线性跟权重没差。

损失函数(loss function):定义一个损失值,越小越接近正确的参数值。

梯度下降(Gradient Descent)的理解:越倾斜,下降越快,越平稳下降越慢;容易达到局部最小值,卡在局部。随机小批量梯度下降(SGD,选1个)比较快。小批量梯度下降(Mini-Batch GD,选k个).

训练速度:mini-batch>SGD>GD,因为现代电脑矩阵相乘的速度大于矩阵和向量相乘。

学习率:过大会学习过头,越过最小值。过小会学的很慢。

建议:1.数据随机;2.使用固定批量;3.调整学习率。

反向传播(backward propagation):通过梯度和学习率更新权重。其实就是微积分链式法则在模型中的体现。反向传播计算出的梯度乘以前向计算的结果,就是下一个变数的偏微分了。

共现矩阵:表示一起出现过的单词的关系。

奇异值分解(singular value decomposition,SVD):降低维度。

SVD问题:计算复杂度过高,难以加新词。

解决方法:降低维度,通过embedding的方法嵌入一个空间中的位置。常用word2vec,Glove方法。

知识型表示(knowledge-based representation):通过符号等来表示知识(知识图谱)

语料库表示(corpus-based representation):基于近邻的高维(共现矩阵),低维(降维或embedding);原子特征(atomic symbol,one-hot向量)

循环神经网络(recurrent neural net,RNN):将前面的影响传递给后面的网络。

梯度消失,梯度爆炸(Vanishing/Exploding Gradient):指数太多次,导致大的越大,小的越小。解决方法:裁剪(clipping)

双向循环神经网络(Bidirectional RNN):当时间可以双向的时候,可以使用。(不能预测股市这种单向时间的)

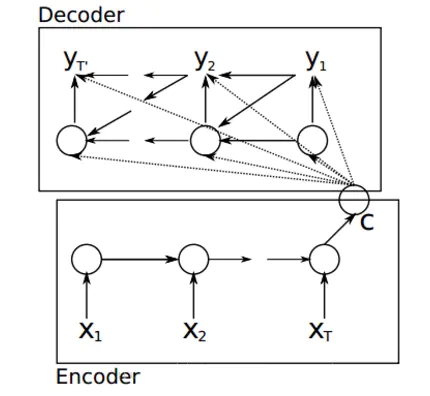

编码器-解码器:编码器生成W或背景向量C,解码器利用编码器结果来生成输出。

批量归一化计算:先归一化,后缩放和平移。

从经验法则来讲,L2正则化一般比L1正则化有效。

编码器-解码器实现注意力机制:编码器收集信息,收集完一整句(注意力在这)之后,保存在编码器,用解码器生成输出,直到遇到。

Q,K,V:Q是指query,K是指编码器中的key,V是指最后一层的Value。

最大化和最小化:多个概率相乘求其最大值,相当于对其求log后加个负号求最小值。也就是说,凡是求最大值的,都可以通过符号变成求最小值。

《第一章》 表示学习 ( Representation Learning):如果有一种算法可以自动地学习出有效的特征, 并提高最终机器学习模型的性能 .

局部表示 ( Local Representation):也叫离散表示,符号表示,例如one-hot向量。

分布式表示 ( Distributed Representation):例如RGB值,通常表示低维的稠密向量。

嵌入 (Embedding):使用神经网络,将高维的局部表示空间映射到非常低维的分布式表示空间,在这个低维的空间中,每个特征不再是坐标轴上的点,而是分散在整个低维空间中。自然语言中的分布式表示,也叫词嵌入 。

深度学习 (Deep Learning):为了学习一种好的表示, 需要构建具有一定“深度”的模型, 并通过学习算法来让模型自动学习出好的特征表示( 从底层特征, 到中层特征, 再到高层特征),从而最终提升预测模型的准确率. 所谓“深度” 是指原始数据进行非线性特征转换的次数 . 如果把一个表示学习系统看作是一个有向图结构, 深度也可以看作是从输入节点到输出节点所经过的最长路径的长度. 某种意义上可以看作一种强化学习(Reinforcement Learning)

深度学习采用的模型主要是神经网络模型 , 其主要原因是神经网络模型可以使用误差反向传播算法, 从而可以比较好地解决贡献度分配问题. 随着模型深度的不断增加, 其特征表示的能力也越来越强, 从而 使后续的预测更加容易

端到端学习 ( End-to-End Learning): 也称端到端训练, 是指在学习过程中不进行分模块或分阶段训练, 直接优化任务的总体目标. 在端到端学习中, 一般不需要明确地给出不同模块或阶段的功能, 中间过程不需要人为干预. 大部分采用神经网络模型的深度学习可以看作一种端到端的学习。

神经网络 (机器学习领域):由很多人工神经元构成的网络结构模型, 这些人工神经元之间的连接强度是可学习的参数.

赫布型学习 ( Hebbian learning):如果两个神经元总是相关联地受到刺激, 它们之间的突触强度增加.

凝固作用 :短期记忆转化为长期记忆的过程

网络容量 ( Network Capacity):指人工神经网络塑造复杂函数的能力, 与可以被储存在网络中的信息的复杂度以及数量相关

1.8总结

特征工程 :要开发一个实际的机器学习系统, 人们往往需要花费大量的精力去尝试设计不同的特征以及特征组合, 来提高最终的系统能力。

MXNet 主要是在20年暑假期间学习开源框架MXNet的开源书籍

《动手学习深度学习》:https://zh.d2l.ai/chapter_preface/preface.html

复制 torch.cuda.is_available()

复制 # 未初始化的矩阵

# 里面的值是不确定的

x = torch.empty(5,3)

print(x)

复制 # 构造随机初始化的矩阵

x = torch.rand(5,3)

x

复制 import torch

# 神经网络的包,仅支持小批量样本的训练,不支持单个样本(可以input.unsqueeze(0)来模拟批量[1])

import torch.nn as nn

import torch.nn.functional as F # 一些常用的函数

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 6, 3) # 卷积核

self.conv2 = nn.Conv2d(6, 16, 3) # 卷积核

# Linear是简单的映射函数,第一个参数w的维度,第二个参数b的维度

self.fc1 = nn.Linear(16 * 6 * 6, 120) # 6*6是图片的长宽

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10) # 最后输出10个分类

def forward(self, x):

x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2)) # 最大二维池化层:2*2格子内取最大

x = F.max_pool2d(F.relu(self.conv2(x)), 2) # 第一个参数的结果是笔直的向量

x = x.view(-1, self.num_flat_features(x)) # -1表示自动计算,view是改变形状

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

def num_flat_features(self, x): # 每个输入变平后的数量大小

size = x.size()[1:] # 获得除了批量大小的所有维度

num_features = 1

for s in size:

num_features *= s

return num_features

net = Net()

print(net) # 获取当前模型的数据

复制 Net(

(conv1): Conv2d(1, 6, kernel_size=(3, 3), stride=(1, 1))

(conv2): Conv2d(6, 16, kernel_size=(3, 3), stride=(1, 1))

(fc1): Linear(in_features=576, out_features=120, bias=True)

(fc2): Linear(in_features=120, out_features=84, bias=True)

(fc3): Linear(in_features=84, out_features=10, bias=True)

)

复制 print(params[1]) # conv1的偏移

复制 tensor([[1.6880e+25, 2.5226e-18, 6.6645e-10],

[4.1575e+21, 1.3294e-08, 2.0773e+20],

[1.6536e-04, 1.0016e-11, 8.3391e-10],

[2.1029e+20, 2.0314e+20, 3.1369e+27],

[7.0800e+31, 3.1095e-18, 1.8590e+34]])

复制 tensor([[0.3129, 0.8714, 0.8079],

[0.4722, 0.3522, 0.3068],

[0.9920, 0.0171, 0.6463],

[0.1151, 0.7443, 0.1300],

[0.2816, 0.7904, 0.1833]])

复制 # 构造一个填充0,且数据类型dtype为long的矩阵

x = torch.zeros(5, 3, dtype=torch.long)

x

复制 tensor([[0, 0, 0],

[0, 0, 0],

[0, 0, 0],

[0, 0, 0],

[0, 0, 0]])

复制 # 将数组转换从tensor张量

x = torch.tensor([5.5, 3])

x

复制 tensor([5.5000, 3.0000])

复制 # 拷贝一个值

x = x.new_ones(5, 3, dtype=torch.double) # new_* methods take in sizes

print(x)

复制 tensor([[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.]], dtype=torch.float64)

复制 # 拷贝形状,并随机赋值-1到1之间

x = torch.randn_like(x, dtype=torch.float) # override dtype!

print(x) # result has the same size

复制 tensor([[0.1310, 0.8429, 0.9671],

[0.4961, 0.4118, 0.5708],

[0.9019, 0.1656, 0.9630],

[0.1138, 0.5194, 0.2060],

[0.0544, 0.8853, 0.4521]])

复制 # 拷贝形状,并随机赋值0到1之间

x = torch.rand_like(x, dtype=torch.float) # override dtype!

print(x) # result has the same size

复制 tensor([[0.2949, 0.5003, 0.8243],

[0.0197, 0.8175, 0.7986],

[0.2791, 0.1747, 0.9388],

[0.6410, 0.7757, 0.9517],

[0.5885, 0.8757, 0.0301]])

复制 # 获取矩阵大小

print(x.size())

复制 # 加法一,会生成新变量

y = torch.rand(5, 3)

print(x + y)

# 加法二,会生成新变量

print(torch.add(x, y))

复制 tensor([[0.8205, 1.1127, 0.9667],

[1.0123, 1.6212, 1.7500],

[0.7720, 0.5131, 1.8308],

[1.1216, 1.0681, 1.8199],

[1.4038, 1.3346, 0.7781]])

tensor([[0.8205, 1.1127, 0.9667],

[1.0123, 1.6212, 1.7500],

[0.7720, 0.5131, 1.8308],

[1.1216, 1.0681, 1.8199],

[1.4038, 1.3346, 0.7781]])

复制 # 加法三,设定指定的变量result为输出

result = torch.empty(5, 3)

torch.add(x, y, out=result)

print(result)

复制 tensor([[0.8205, 1.1127, 0.9667],

[1.0123, 1.6212, 1.7500],

[0.7720, 0.5131, 1.8308],

[1.1216, 1.0681, 1.8199],

[1.4038, 1.3346, 0.7781]])

复制 # 加法四:就地加法,不会生成新变量,但会改变其中一个参数

y.add_(x)

print(y)

复制 tensor([[0.8205, 1.1127, 0.9667],

[1.0123, 1.6212, 1.7500],

[0.7720, 0.5131, 1.8308],

[1.1216, 1.0681, 1.8199],

[1.4038, 1.3346, 0.7781]])

复制 tensor([[0.8205, 1.0123, 0.7720, 1.1216, 1.4038],

[1.1127, 1.6212, 0.5131, 1.0681, 1.3346],

[0.9667, 1.7500, 1.8308, 1.8199, 0.7781]])

复制 # 拷贝

x.copy_(y.t_())

# 任何使得张量发生变化的操作都需要添加下划线_

复制 tensor([[0.8205, 1.1127, 0.9667],

[1.0123, 1.6212, 1.7500],

[0.7720, 0.5131, 1.8308],

[1.1216, 1.0681, 1.8199],

[1.4038, 1.3346, 0.7781]])

复制 # 输出所有列,第1行

print(x[:, 1])

复制 tensor([1.1127, 1.6212, 0.5131, 1.0681, 1.3346])

复制 # 通过view改变形状

x = torch.randn(4, 4)

y = x.view(16)

z = x.view(-1, 8) # -1表示根据其他维度来推断

print(x.size(), y.size(), z.size())

复制 torch.Size([4, 4]) torch.Size([16]) torch.Size([2, 8])

复制 # 张量转化为数字

x = torch.randn(1)

print(x)

print(x.item())

复制 tensor([1.2919])

1.291871190071106

复制 # torch转NumPy

# 两个变量共享内存

a = torch.ones(5)

print(a)

b = a.numpy()

print(b)

a.add_(1)

print(a)

print(b)

复制 tensor([1., 1., 1., 1., 1.])

[1. 1. 1. 1. 1.]

tensor([2., 2., 2., 2., 2.])

[2. 2. 2. 2. 2.]

复制 # NumPy转torch

# 自动转化

import numpy as np

a = np.ones(5)

b = torch.from_numpy(a)

np.add(a, 1, out=a)

print(a)

print(b)

# CharTensor不支持转化为NumPy

复制 [2. 2. 2. 2. 2.]

tensor([2., 2., 2., 2., 2.], dtype=torch.float64)

复制 # 以下代码只有在PyTorch GPU版本上才会执行

if torch.cuda.is_available():

device = torch.device("cuda") # GPU

y = torch.ones_like(x, device=device) # 直接创建一个在GPU上的Tensor

x = x.to(device) # 改变环境,等价于 .to("cuda")

z = x + y

print(z)

print(z.to("cpu", torch.double)) # to()还可以同时更改数据类型

复制 tensor([1.1416, 2.4353], device='cuda:0')

tensor([1.1416, 2.4353], dtype=torch.float64)

复制 tensor([1., 1.], device='cuda:0')

复制 params = list(net.parameters())

print(len(params)) # 自动学习参数的数量

print(params[0].size()) # 6*1*3*3 ,conv1的权重

复制 10

torch.Size([6, 1, 3, 3])

复制 Parameter containing:

tensor([ 0.0176, 0.2514, 0.1497, 0.1647, -0.2829, 0.0409],

requires_grad=True)

复制 input = torch.randn(1,1,32,32)

out = net(input)

print(out) # 输出,数值最大的是正确分类,但一开始没有训练是随机的

复制 tensor([[ 6.9398e-03, -6.6113e-05, -6.9214e-02, -7.6395e-02, 3.9166e-02,

-7.0978e-02, 1.2993e-02, -1.7690e-02, -1.4292e-02, -4.7204e-02]],

grad_fn=<AddmmBackward>)

复制 net.zero_grad() # 设置所有梯度为0

out.backward(torch.randn(1,10)) # 设置随机数为梯度

复制 output = net(input)

target = torch.randn(10) # 假设一个假的结果

target = target.view(1, -1) # 变为[1,10]形状

criterion = nn.MSELoss() # 均方误差

loss = criterion(output, target)

print(loss)

复制 tensor(0.7828, grad_fn=<MseLossBackward>)

复制 """

input -> conv2d -> relu -> maxpool2d -> conv2d -> relu -> maxpool2d

-> view -> linear -> relu -> linear -> relu(3) -> linear(2)

-> MSELoss(1)

-> loss

"""

print(loss.grad_fn) # MSELoss

print(loss.grad_fn.next_functions[0][0]) # Linear

print(loss.grad_fn.next_functions[0][0].next_functions[0][0]) # ReLU

复制 <MseLossBackward object at 0x7fefaac1c8d0>

<AddmmBackward object at 0x7fefaac1ca90>

<AccumulateGrad object at 0x7fefaac1c8d0>

复制 net.zero_grad()

print('conv1.bias.grad before backward') # 反向传播之前

print(net.conv1.bias.grad)

loss.backward()

print('conv1.bias.grad after backward') # 反向传播后

print(net.conv1.bias.grad)

复制 conv1.bias.grad before backward

tensor([0., 0., 0., 0., 0., 0.])

conv1.bias.grad after backward

tensor([-0.0158, -0.0015, -0.0017, 0.0073, 0.0074, -0.0123])

复制 # 更新权重

learning_rate = 0.01

for f in net.parameters():

f.data.sub_(f.grad.data * learning_rate) # sub_是减等于

复制 import torch.optim as optim # 优化算法包

optimizer = optim.SGD(net.parameters(), lr=0.01) # 创建优化算法器

optimizer.zero_grad() # 初始化梯度为0

output = net(input) # 前向传播

loss = criterion(output, target) # 计算损失

loss.backward() # 反向传播

optimizer.step() # 更新权重 感知伴侣满意度

依恋回避

这些变量并没有增加信息,都无法预测谁的关系质量会随着时间的推移而增加或减少

选择最好的预测器 构建决策树,并在未使用的数据上测试预测能力

分别产生了数千个决策树,然后使用平均值结合在一起。

为了计算每个效应量,我们将随机森林模型结果的“方差占比”转换为效应大小r(通过取平方根) ;

不纯度(impurity)在分类中通常为Gini不纯度或信息增益/信息熵,对于回归问题来说是方差。

概率越大,纯度(或熵)越小 ,即发生的不确定性越小

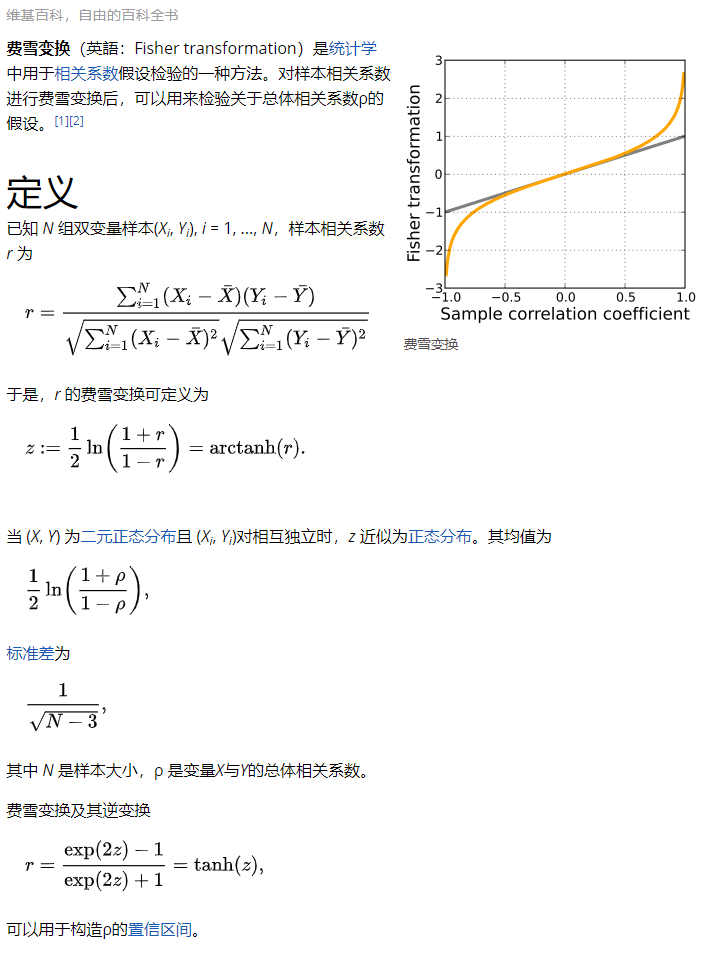

然后我们进行Fisher-zr变换,我们使用N-3作为逆方差权重,其中N等于随机森林中使用的观察数分析

z' = 0.5 * ln((1+z)/(1-z))

我们将meta分析的结果转换回结果中占百分比的方差(通过将值平方 )

阅读全文后再剔除,2篇重复,2篇不是手稿,1篇没有临床,3篇质量差

2项包括贝叶斯置信网络 和动态时间扭曲(DTW) 算法

早期检测可以及时修正风险因素达到高质量的HD实验和有利的结果

诊断

用人工智能评估动静脉瘘管的流通性可以改善HD实验的结果和病人的生命质量

通过用人工智能解决方案代替更昂贵的诊断流程能减少医疗成本

处方

人工智能推荐药物剂量预防HD相关的并发症如贫血和血红蛋白的波动、矿物质失衡等

算法的参与会导致更少的并发症和减少药物的使用,减少治疗费用

预测

特定的算法预测生命质量,对心血管的测量结果和透析内的血流动力学事件的变化

生存和生命质量预测模型可以减轻对公共卫生的影响,更好的指导资源的使用

预测血流动力学事件通过避免低血压、心率和容量的变异性以实时适应HD过程,从而保证HD实验的成功和整体成本效益的干预措施。

挑战和需要更多人工智能研究的HD领域

实时监控的人工智能系统通过在HD实验中的嵌入式自动适应性反应以实现个性化治疗

通过AI/ML系统和专家负责的HD实验的反馈实现两者之间潜在的相互作用,使得两个部分相互学习,为ESRD患者提供更好的决策。

更多的人工智能系统将部署在HD病人护理上,这会产生规模更大的数据。应促使制定有关数据隐私、维护和共享的严格法规,以便在公共医疗保健中更安全地实施

实验三

结合生物标记测量期间急性腹膜炎和基于支持向量机、神经网络和随机森林的特征选择方法,一项研究(包括83 PD患者腹膜炎)证实了先进的数学模型在分析复杂的生物医学数据集和强调感染部位病原体特异性炎症反应的关键途径方面的强大作用。

实验四

利用CAPD患者的数据挖掘模型,提取模式,根据他们的血液分析对卒中风险患者进行分类。在最近的一项研究中,分析了850个病例的数据集,五种不同的人工智能算法(朴素贝叶斯、逻辑回归、MLP、随机森林和k-NN)被用来预测患者的中风风险。RT和k-NN预测脑卒中风险的特异性和敏感性均为95%。

人工智能算法可以发现生物标志物特征与不同类型的感染之间的联系

对早期采取适当的治疗方法以及避免易感ESRD患者的严重感染并发症有巨大的意义

AI通过突出PD中病原体特异性炎症反应所涉及的关键途径,为扩大病理生理机制的科学知识做出贡献

处方

通过使用人工智能,将透析前患者分为高转运蛋白组和低转运蛋白组,可以为尿毒症患者提供更好的治疗选择,这将改善PD患者的预后(根据经验预测的疾病发展情况),降低发病率和死亡率

预测

算法可以识别患者中风的风险,因此允许早期干预和减少PD的住院数

挑战和需要更多人工智能研究的PD领域

人工智能可以用来识别有腹膜炎风险的患者,以降低感染风险,克服腹膜透析过程中的巨大负担

在自动化PD中开展家庭远程监测的研究可以改善患者的治疗结果和对治疗的坚持

对使用吸附剂技术的透析液再生制造自动化可穿戴人工肾PD装置的安全性的研究

实验三

DTW(动态时间规整算法)用于识别一系列实验室数据中的异常模式,从而早期发现并报告与急性排斥反应相关的肌酐病程[56]。对数据库中存储的1,059,403个实验室值、43,638个肌酐测量值、1143名患者和680次排斥事件进行数据提取。通过将人工智能集成到电子患者登记系统中,可以前瞻性地评估对移植受者护理的真正影响。

实验四

在一项对257名接受KT的儿科患者进行的回顾性研究中,采用了一个经过反向传播训练的MLP,使用20个简单的输入变量来确定血清肌酐的延迟下降(移植肾功能恢复的延迟)[57]。据报道,其他模型(决策树)突出了有移植物丢失风险的受试者。

实验五

使用USRDS数据库中的数据集(来自5144名患者的48个临床变量),开发了一个作为移植前器官匹配工具的M1软件(基于贝叶斯信念网络(BBN))。该模型可以预测第一年内移植失败,特异性为80%。

实验六

对1045名KT患者进行研究,8项ML技术接受了基于药物遗传算法的TSD()预测培训。确定了与TSD显著相关的临床和遗传因素。高血压、奥美拉唑的使用和CYP3A5基因型用于构建多元线性回归(MLR)

实验七

一项涉及129名KT患者的前瞻性研究证实,通过神经网络将多个ABCB1多态性与CYP3A5基因型结合起来,可以更准确地计算出他克莫司的初始剂量,从而改善治疗并预防他克莫司毒性。

实验八

37名KT患者被随机分为低脂标准组和地中海饮食组。对于黄斑变性组,神经网络有两个隐藏层,分别有223个和2个神经元。在对照组中,网络也有两个隐藏层,分别有148个和2个神经元。结论是在不影响血脂水平的情况下,地中海饮食对移植后患者是理想的。该报告是唯一一项评估移植后不同类型饮食优势的研究。

处方

各种ML模型准确地预测他克莫司稳定的剂量,成功改善移植后的免疫抑制治疗和预防他克莫司毒性

ML可以评估移植手术后不同类型的饮食的优势,可以使KT对生命质量产生积极的影响

预测

人工智能被用于预测移植排斥反应、“移植延迟功能”和死亡率

人工智能算法可做作为移植前器官匹配工具,使得器官的分配更加合理,从整体优化KT医疗保健管理系统

挑战和需要更多人工智能研究的KT领域

预防性的人工智能工具可以用于识别移植物排斥和移植物丢失的可变危险因素,为病人提供成功率更高的KT

指导方针需要支持使用人工智能来分配器官或预测排斥反应

通过将人工智能整合到电子病人登记系统中,可以很容易地对人工智能对移植受者护理的影响进行前瞻性评估

我们期望在未来几年,所有肾移植程序将通过人工智能管理

透析服务管理,透析程序,贫血管理,激素/饮食问题,动静脉瘘(异常通道)评估

管理系统(ML用作移植前器官匹配工具),预测移植排斥反应,他克莫斯(肝肾移植的一线用药)治疗调节,饮食问题

透析服务管理,透析程序,贫血管理,激素/饮食问题,动静脉瘘(异常通道)评估

管理系统(ML用作移植前器官匹配工具),预测移植排斥反应,他克莫斯(肝肾移植的一线用药)治疗调节,饮食

Random walks

Graph auto encoders (GAE)

第一想法:传统自编码器,用隐藏向量还原原始数据,即训练目标为output拟合原始数据

进一步想法:变分自编码器,为每个样本构造专属的正态分布,然后采样获得隐藏向量来重构。隐藏向量的分布尽量能接近高斯分布,能够随机生成隐含变量喂给解码器,也提高了泛化能力。

但是,对于数据集和任务来说,完成任务所需要的特征并不一定要能完成图像重构。例如,辨别百元假钞不一定要能完整复刻出百元假钞。

互信息(MI, mutual information)

好特征的基本原则应当是**“能够从整个数据集中辨别出该样本出来”**,也就是说,提取出该样本(最)独特 的信息。

熵H(Y)与条件熵H(Y|X)之差称为互信息,决策树学习中的信息增益等价于训练数据集中类与特征的互信息。

互信息:变量间相互依赖性 的量度。不同于相关系数,互信息并不局限于实值随机变量。它能度量两个事件集合之间的相关性。

用 X 表示原始图像的集合,用 x∈X 表示某一原始图像。

Z 表示编码向量的集合,z∈Z 表示某个编码向量。

p(z|x) 表示 x 所产生的编码向量的分布,我们设它为高斯分布。这是我们要找的编码器 。

p̃(x) 原始数据的分布,p(z) 是在 p(z|x) 给定之后整个 Z 的分布

I(X, Z) = H(Z) - H(Z|X) :熵 H(Z) 看作一个随机变量不确定度的量度,那么 H(Z|X) 就是 X 没有涉及到的 Z 的部分的不确定度的量度。总的Z的不确定度,减去知道X而剩下的Y的不确定度,所以可以直观地理解互信息是Z变量提供给Y的信息量

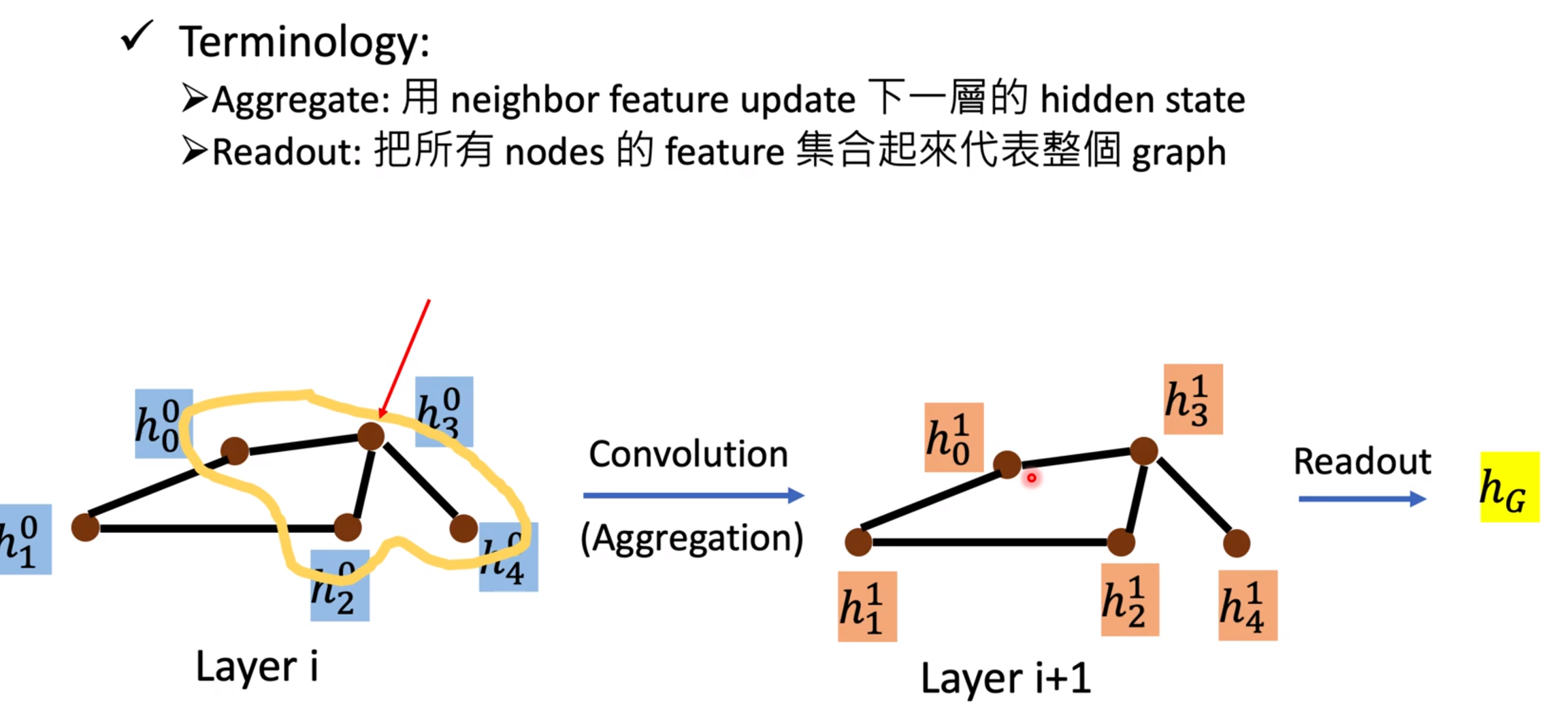

增强机制:对图的结构进行增广,然后对相同的节点进行子采样。类似于CV中的裁剪。

两个专用的GNN:即图编码器。对应原数据和增强后的数据。

使用GCN。σ(AXΘ) and σ(SXΘ), X为初始节点的特征,Θ为学习参数。

一个共享的MLP(靠左):用于学习图的节点表示 。具有两个隐藏层和PReLU激活函数。

一个图池化层P:即readout函数,而后传入共享MLP(结构同上)中,得出图表示 。

每个GCN层中的节点表示的总和,连接起来,然后将它们馈送到一个单层前馈网络。

鉴别器D:图的节点表示 和另一个图的图形表示 进行对比。并对它们的一致性进行评分。

Θ是权重系数,表示全局和局部信息的比例。所有θ之和为1。

heat kernel : 带入T = AD-1, θk = α(1-α)k。α是随机游走的传送概率,t是

Personalized PageRank : 带入T = D-1/2AD-1/2, θk = e-ttk/k!

从一个图中随机采样节点及边,然后再从另一个图(扩散后)中确定对应的节点和边。

扩充view的数量超过两个不会改善性能,最好的效果是邻接矩阵和传播矩阵 进行对比学习。

对比节点和视图的表示 达到更好的效果,优于,图-图表示对比学习,不同长度的编码对比学习。

**简单的图读出层(求和)**比differentiable pooling(DiffPool)效果更好。

预训练时候,应用正则化(提前停止除外)或规范化层会对性能产生负面影响。

Tensor

,去翻翻官方API就知道了,下表给了一些常用的作参考。

这些创建方法都可以在创建的时候指定数据类型dtype和存放device(cpu/gpu)。

另外,PyTorch还支持一些线性函数,这里提一下,免得用起来的时候自己造轮子,具体用法参考官方文档。如下表所示:

addmm/addbmm/addmv/addr/baddbmm..

当对两个形状不同的Tensor按元素运算时,可能会触发广播(broadcasting)机制:先适当复制元素使这两个Tensor形状相同后再按元素运算。

所有在CPU上的Tensor(除了CharTensor)都支持与NumPy数组相互转换。

Tensor是这个包的核心类,如果将其属性.requires_grad设置为True,它将开始追踪(track)在其上的所有操作(这样就可以利用链式法则进行梯度传播了)。完成计算后,可以调用.backward()来完成所有梯度计算。此Tensor的梯度将累积到.grad属性中。

注意在y.backward()时,如果y是标量,则不需要为backward()传入任何参数;否则,需要传入一个与y同形的Tensor。解释见 2.3.2 节。

如果不想要被继续追踪,可以调用.detach()将其从追踪记录中分离出来,这样就可以防止将来的计算被追踪,这样梯度就传不过去了。

可以用with torch.no_grad()将不想被追踪的操作代码块包裹起来,这种方法在评估模型的时候很常用,因为在评估模型时,我们并不需要计算可训练参数(requires_grad=True)的梯度。

Function是另外一个很重要的类。Tensor和Function互相结合就可以构建一个记录有整个计算过程的有向无环图(DAG)。

每个Tensor都有一个.grad_fn属性,该属性即创建该Tensor的Function, 就是说该Tensor是不是通过某些运算得到的,若是,则grad_fn返回一个与这些运算相关的对象,否则是None。

tensor.requires_grad 用于说明当前量是否需要在计算中保留对应的梯度信息

标量out,直接调用out.backward();不允许使用张量对张量求导,需要加权平均,例如out.backward(w);

如果我们想要修改tensor的数值,但是又不希望被autograd记录(即不会影响反向传播),那么我么可以对tensor.data进行操作。

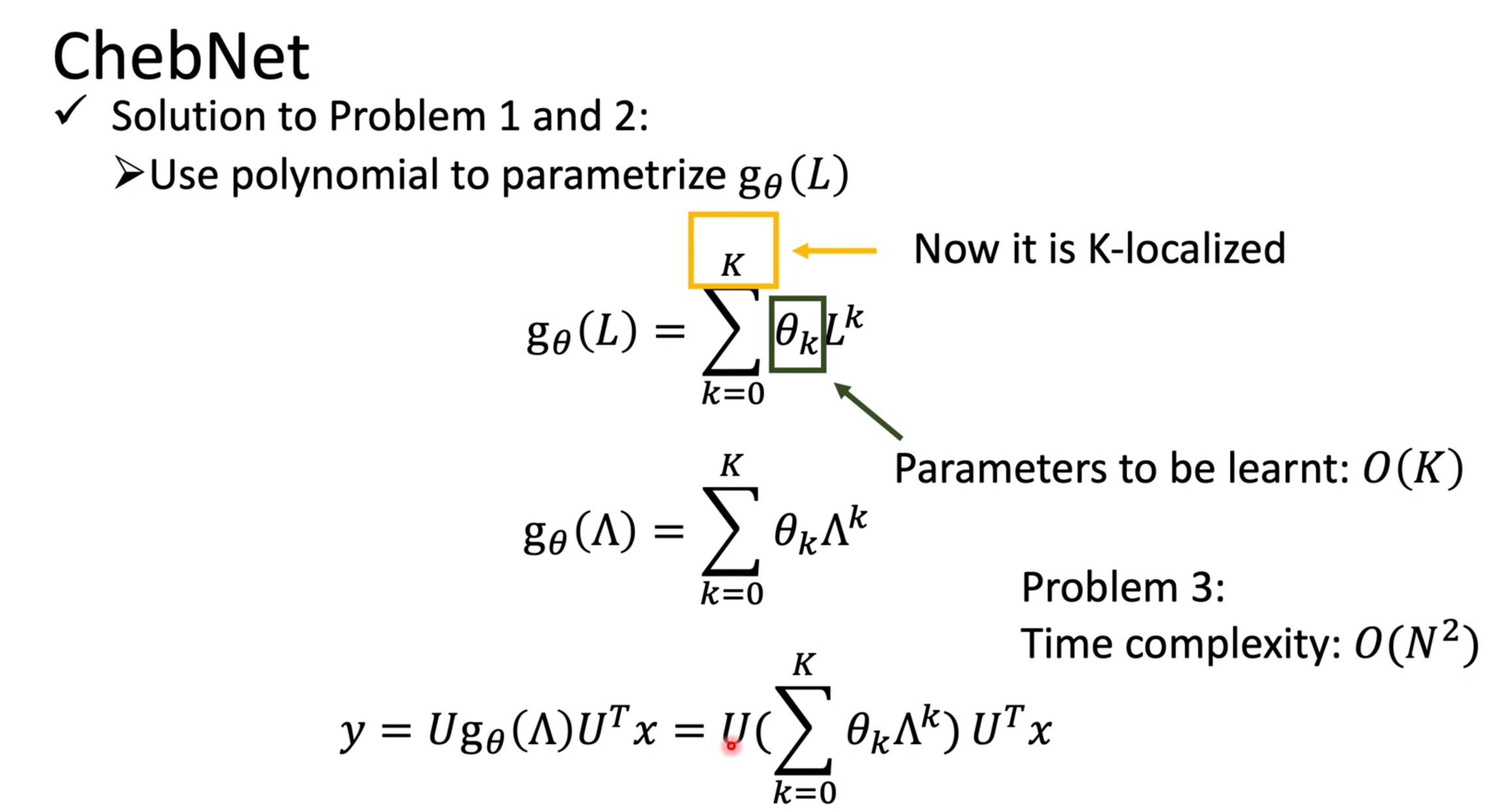

Fourier Domain(傅里叶变化) ; Spectral-based convolution

重点:GAT(graph attention network),GCN

L是半正定,特征值都是大于等于0。Lf中的第i个结果代表了第i点和相邻节点的差。

fTLf代表了两个点的能量差异,可以当作一种“能量”,“频率”来使用。

复制 import torch

import torchvision # 常用数据集包

import torchvision.transforms as transforms # 数据转归一化

复制 transform = transforms.Compose( # 自定义一个转换器,先变成张量,再归一化(每个通道的均值序列,标准差序列)

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]) # (0-0.5)/0.5 = -1

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True,

transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True,

num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,

transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False,

num_workers=2)

# num_workers根据计算机的CPU和内存来设置,充足可以设置多一些

# 设为0表示不用内存

classes = ('plane','car', 'bird','cat','deer', 'dog','frog','horse','ship','truck')

复制 Files already downloaded and verified

Files already downloaded and verified

《第四章》 定义:构成神经网络的基本单元,其主要是模拟生物神经元的结构和特性,接收一组输入信号并产出 输出 激活函数特定: (1)连续并可导(允许少数点上不可导)的非线性函数. 可导的激活函数可以直接利用数值优化 的方法来学习网络参数.

(2) 激活函数及其导函数要尽可能的简单 ,有利于提高网络计算效率.

(3) 激活函数的导函数的值域要在一个合适的区间 内, 不能太大也不能太小,否则会影响训练的效率和稳定性.

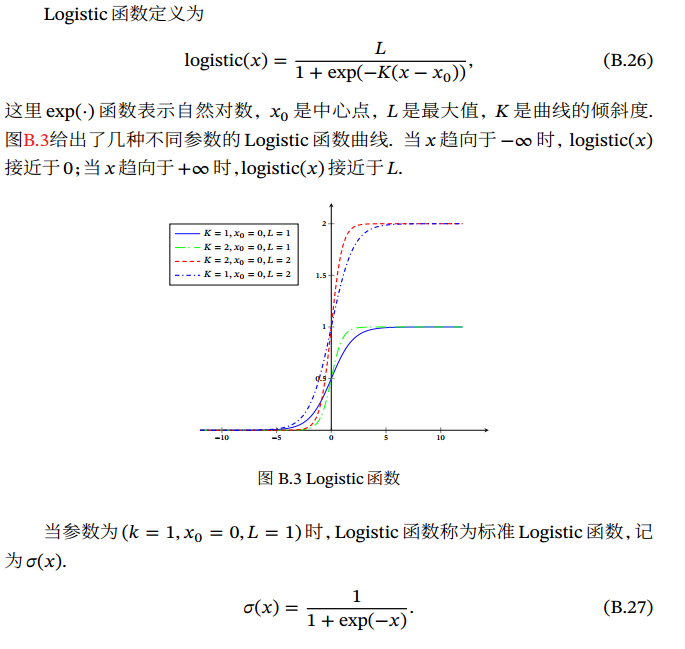

4.1.1 Sigmoid函数

Sigmoid型函数是指一类 S型曲线函数,为两端饱和函数. (𝑥 → −∞时,其导数 𝑓′(𝑥) → 0,则称其为左饱和)

常用的 Sigmoid型函数: Logistic函数 和 Tanh函数 .

Logistic: 𝜎(𝑥) = 1/[1 + exp(−𝑥)]理解:把一个实数域的输入“ 挤压” 到(0, 1). 当输入值在 0附近时,Sigmoid型函数近似为线性函数;当输入值靠近两端时,对输入进行抑制. 输入越小,越接近于 0;输入越大,越接近于 1. 特征: - 其输出直接可以看作是概率分布 - 其可以看作是一个软性门(Soft Gate),用来控制其他神经元输出信息的数量tanh(𝑥) = (exp(𝑥) − exp(−𝑥))/(exp(𝑥) + exp(−𝑥))tanh(𝑥) = 2𝜎(2𝑥) − 1

理解:看作是放大并平移的 Logistic函数,其值域是(−1,1)

4.1.1.1 Hard-Logistic函数和 Hard-Tanh函数

两个函数:

ReLU(Rectified Linear Unit,修正线性单元)

ReLU(𝑥) = max(0,𝑥)理解: ReLU 却具有很好的稀疏性, 大约50% 的神经元会处于激活状态.被认为有生物上的解释性,比如单侧抑制、宽兴奋边界(即兴奋程度也可以非常高). ReLU 函数为左饱和函数,且在𝑥 > 0时导数为 1,在一定程度上缓解了神经网络的梯度消失问题,加速梯度下降的收敛速度

缺点:死亡 ReLU 问题(Dying ReLU Problem),如果参数在一次不恰当的更新后,第一个隐藏层中的某个 ReLU神经元在所有的训练数据上都不能被激活, 那么这个神经元自身参数的梯度永远都会是 0,在以后的训练过程中永远不能被激活.

4.1.2.1 带泄漏的ReLU

LeakyReLU(𝑥) = max(0,𝑥) + 𝛾 min(0,𝑥)当𝛾 < 1时:LeakyReLU(𝑥) = max(𝑥,𝛾𝑥)

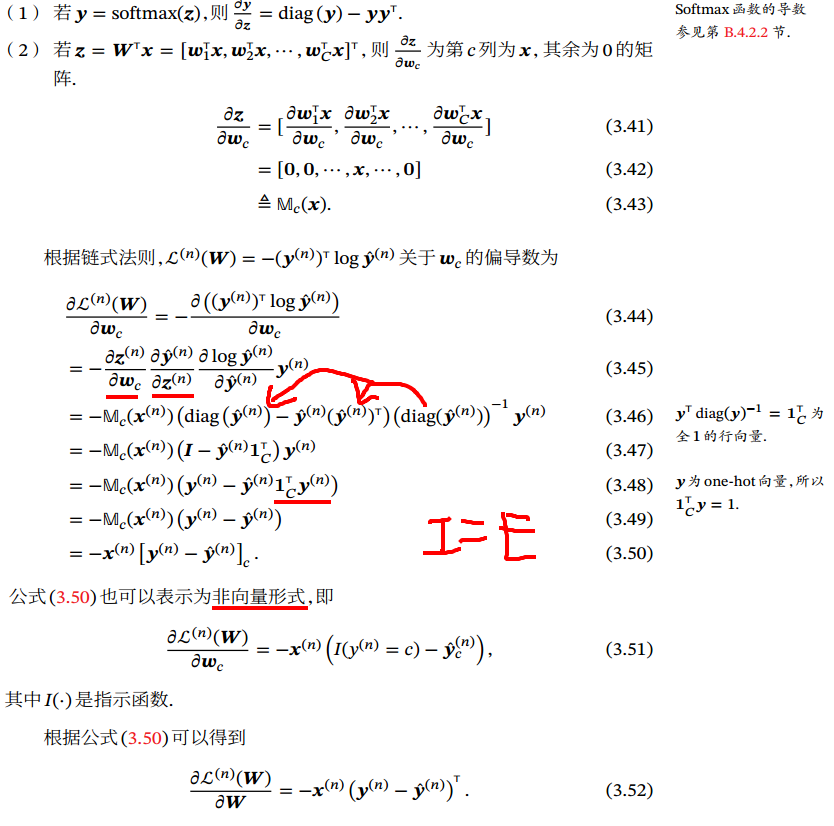

4.1.2.2 带参数的ReLU

PReLU𝑖(𝑥)= max(0, 𝑥) + 𝛾_𝑖 min(0, 𝑥)

𝛾_𝑖 为 𝑥 ≤ 0 时函数的斜率. 因此, PReLU 是非饱和函数. 如果 𝛾𝑖 = 0, 那么PReLU就退化为 ReLU. 如果 𝛾𝑖 为一个很小的常数,则 PReLU可以看作带泄露的ReLU. PReLU 可以允许不同神经元具有不同的参数 ,也可以一组神经元共享一个参数.

ELU(x) = = max(0, 𝑥) + min(0, 𝛾(exp(𝑥) − 1))

𝛾 ≥ 0是一个超参数,决定 𝑥 ≤ 0时的饱和曲线,并调整输出均值在 0附近

4.1.2.4 Softplus函数

Softplus(𝑥) = log(1 + exp(𝑥))

看作是 Rectifier 函数的平滑版本,其导数刚好是 Logistic函数. Softplus函数虽然也具有单侧抑制、宽兴奋边界的特性,却没有稀疏激活性.

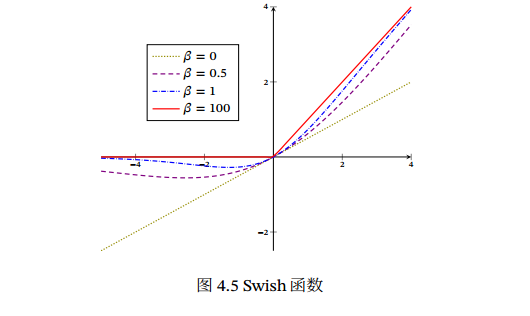

swish(𝑥) = 𝑥𝜎(𝛽𝑥)𝜎(⋅) 为 Logistic 函数, 𝛽 为可学习的参数或一个固定超参数. 𝜎(⋅)∈ (0, 1) 可以看作是一种软性的门控机制. 接近1为开,接近0为关.

当 𝛽 = 0时,Swish函数变成线性函数 𝑥/2.

当 𝛽 = 1时,Swish函数在 𝑥 > 0时近似线性, 在 𝑥 < 0 时近似饱和,同时具有一定的非单调性.

当 𝛽 → +∞ 时,𝜎(𝛽𝑥)趋向于离散的 0-1函数,Swish函数近似为 ReLU函数.

Swish函数可以看作是线性函数和 ReLU函数之间的非线性插值函数,其程度由参数 𝛽 控制

高斯误差线性单元(Gaussian Error Linear Unit,GELU),类似swish,用门控机制来调整输出值

GELU(𝑥) = 𝑥𝑃(𝑋 ≤ 𝑥)𝑃(𝑋 ≤ 𝑥)是高斯分布𝒩(𝜇,𝜎2)的累积分布函数,𝜇,𝜎为超参数,一般设 𝜇 = 0,𝜎 = 1即可.

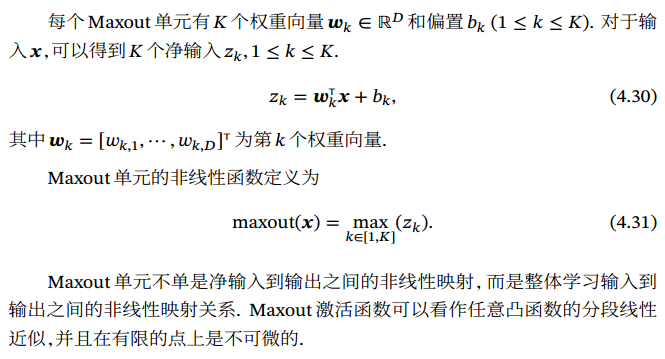

Maxout单元是一种分段线性函数.

Sigmoid 型函数、ReLU等激活函数的输入是神经元的净输入𝑧,是一个标量 .

Maxout单元的输入是上一层神经元的全部原始输出,是一个向量 𝒙 = [𝑥1;𝑥2;⋯;𝑥𝐷].

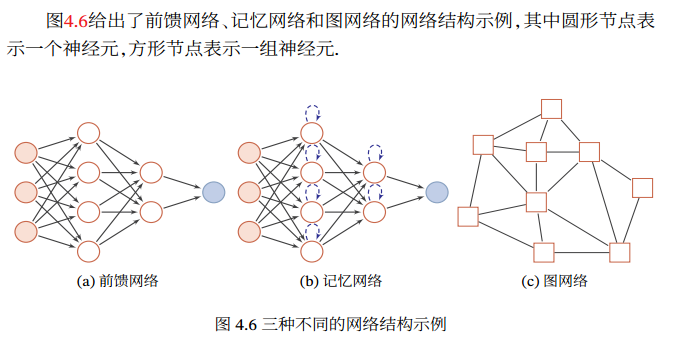

神经网络 :通过一定的连接方式或信息传递方式进行协作的神经元.

整个网络中的信息是朝一个方向传播,没有反向 的信息传播,可以用一个有向无环路图 表示.

前馈网络包括全连接前馈网络 和卷积神经网络 等.

前馈网络可看作一个函数,通过简单非线性函数的多次复合,实现输入空间到输出空间的复杂映射

记忆网络,也称为反馈网络 ,网络中的神经元不但可以接收其他神经元的信 息, 也可以接收自己的历史信息. 在不同的时刻具有不同的状态.

记忆神经网络中的信息传播可以是单向或双向传递,因此可用一个有向循环图或无向图 来表示. 为了增强记忆网络的记忆容量,可以引入外部记忆单元和读写机制,用来保存一些网络的中间状态, 称为记忆增强神经网络(Memory Augmented Neural Network,MANN)(第8.5节),比如神经图灵机和记忆网络记忆网络包括循环神经网络(第6章)、Hopfield网络(第8.6.1节)、玻尔兹曼机(第12.1节)、受限玻尔兹曼机(第12.2节)等.

记忆网络可以看作一个程序,具有更强的计算和记忆能力

实际应用中很多数据是图结构的数据,比如知识图谱、社交网络、分子(Molecular )网络等.

图网络是定义在图结构数据上的神经网络

图网络是前馈网络和记忆网络的泛化,包含很多不同的实现方式,比如图卷 积网络(Graph Convolutional Network, GCN)[Kipf et al., 2016]、 图注意力网络(Graph Attention Network,GAT)[Veličković et al., 2017]、消息传递神经网络(Message Passing Neural Network,MPNN)[Gilmer et al., 2017]等.

前馈神经网络(Feedforward Neural Network,FNN):多层的 Logistic回归模型(连续的非线性函数)组成.

第 0层称为输入层,最后一层称为输出层,其他中间层称为隐藏层.

第l-1层活性值(Activation):𝒂(𝑙−1)

第l层神经元的净活性值(Net Activation)𝒛(𝑙)

多层感知器(Multi-Layer Perceptron, MLP):由多层的感知器(不连续的非线性函数)组成.

通用近似定理 (Universal approximation theorem,一译万能逼近定理)」:如果一个前馈神经网络具有线性输出层和至少一层隐藏层,只要给予网络足够数量的神经元,便可以实现以足够高精度来逼近任意一个在 ℝn 的紧子集 (Compact subset) 上的连续函数。

对于二分类问题𝑦 ∈ {0,1}, 并采用 Logistic 回归, 那么 Logistic 回归分类器可以看成神经网络的最后一层. 也就是说,网络的最后一层只用一个神经元,并且激活函数为 Logistic函数. 网络的输出可以直接作为类别𝑦 = 1的后验概率.

𝑝(𝑦 = 1|𝒙) = 𝑎(𝐿),

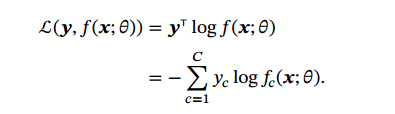

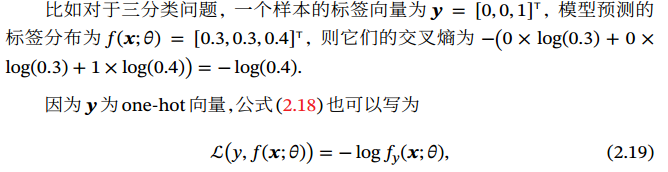

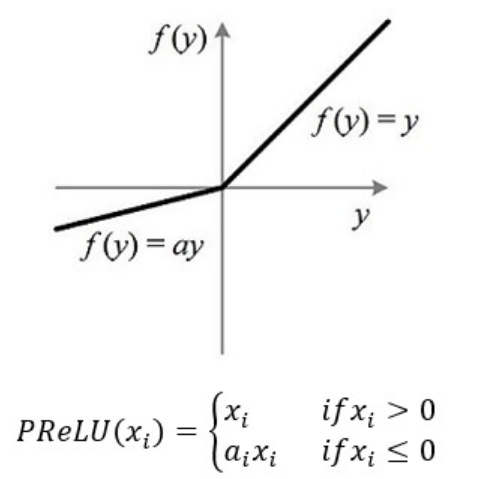

对于多分类问题,如果使用 Softmax 回归分类器,相当于网络最后一层设置𝐶 个神经元,其激活函数为 Softmax函数. 网络最后一层(第𝐿层)的输出可以作为每个类的后验概率.

̂y = softmax(𝒛(𝐿))

交叉熵损失函数:ℒ(𝒚,𝒚) = − ̂y^T log(𝒚).𝒚 ∈ {0,1}^𝐶 为标签𝑦 对应的 one-hot向量表示.

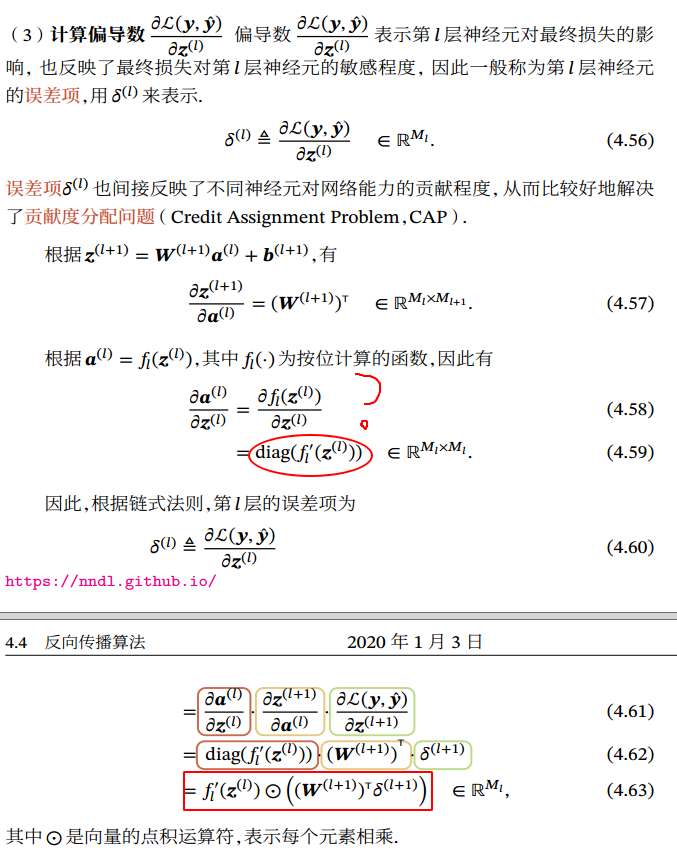

矩阵积分分三种形式: 反向传播算法 的含义是:第𝑙 层的一个神经元的误差项(或敏感性)是所有与该神经元相连的第 𝑙 + 1层的神经元的误差项的权重和. 然后,再乘上该神经元激活函数的梯度.

使用误差反向传播算法的前馈神经网络训练过程可以分为以下三步:

前馈计算每一层的净输入 𝒛(𝑙) 和激活值 𝒂(𝑙),直到最后一层;

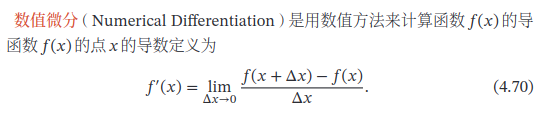

过小,会引起数值计算问题,比如舍入误差(Round-off Error)近似值和精确值的差异;

过大,会增加截断误差(Truncation Error)是指理论解和精确解之间的误差.

一种基于符号计算的自动求导方法.符号计算也叫代数计算. 例如x^2的导数是2x

缺点:

4.5.3 自动微分(Automatic Differentiation, AD)

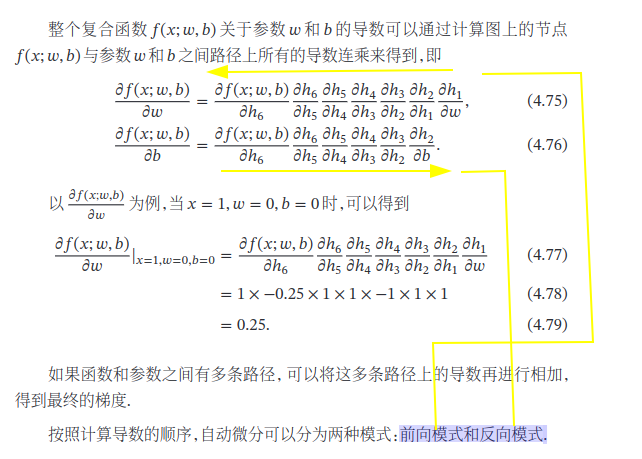

自动微分的处理对象是一个函数或一段程序. 所有的数值计算可以分解为一些基本操作,然后利用链式法则 来自动计算一个复合函数的梯度.

前向模式需要对每一个输入变量都进行一遍遍历,共需要𝑁遍.

反向模式需要对每一个输出都进行一个遍历,共需要𝑀遍.

风险函数为𝑓 ∶ ℝ^𝑁→ ℝ,输出为标量,因此采用反向模式为最有效的计算方式,只需要一遍计算

静态计算图 是在编译时构建计算图,计算图构建好之后在程序运行时不能改变,静态计算图在构建时可以进行优化,并行能力强,但灵活性比较差.

动态计算图 是在程序运行时动态构建.两种构建方式各有优缺点.动态计算图则不容易优化,当不同输入的网络结构不一致时,难以并行计算,但是灵活性比较高.

Theano和Tensorflow1.x采用的是静态计算图,Tensorflow 2.0也支持了动态计算图.而DyNet、Chainer和PyTorch采用的是动态计算

神经网络的优化问题是一个非凸优化问题.(局部最小值不是全局最小值)

Logistic函数导数 :𝜎′(𝑥) = 𝜎(𝑥)(1 − 𝜎(𝑥))∈ [0,0.25]

Tanh函数导数 :tanh′(𝑥) = 1 −(tanh(𝑥))^2 ∈ [0,1]

梯度消失问题(Vanishing Gradient Problem):当网络层数增多时,梯度会不断衰减,直至消失.

解决方法:

《第一章》《第二章》 研究如何使计算机系统利用经验改善性能。

关注如何自动找出表示数据的合适方式,以便更好地将输入变换为正确的输出。

具有多级表示的表征学习方法。在每一级(从原始数据开始),深度学习通过简单的函数将该级的表示变换为更高级的表示。因此,深度学习模型也可以看作是由许多简单函数复合而成的函数。当这些复合的函数足够多时,深度学习模型就可以表达非常复杂的变换。

端到端的训练——将整个系统组建好之后一起训练。(整个模型一起训练,调整)

从含参数统计模型转向完全无参数的模型——当数据非常稀缺时,我们需要通过简化对现实的假设来得到实用的模型。当数据充足时,我们就可以用能更好地拟合现实的无参数模型来替代这些含参数模型。(像人类一样,有经验根据经验判断,没经验用直觉)

对非最优解的包容、对非凸非线性优化的使用,以及勇于尝试没有被证明过的方法。(某种意义上的直觉)

踩了个大坑,不要直接下载NVIDIA官网的cuda11.0,找不到mxnet-cu110的包。

如果下载mxnet-cu101,也需要下载cuda10.1版本,才能import mxnet。

在import mxnet的时候发现了dll文件找不到,下载了最新的vc和一个dependences walker软件查看dll。而后重启jupyter内核,莫名奇妙解决了,至今不知问题在哪。

arange(n)——[0,1...,n],理解为a指一维,range指范围

shape——数组的形状,即每个维度的长度所组合成的元组,(3,4)表示三行四列

运行本节中的代码。将本节中条件判别式X == Y改为X < Y或X > Y,看看能够得到什么样的NDArray。

答:逐个判断元素地比较大小

《第二章》 模式识别 ( Pattern Recognition,PR):在早期的工程领域, 机器学习也经常称为模式识别, 但模式识别更偏向于具体的应用任务, 比如光学字符识别、 语音识别、 人脸识别等.

线性,非线性

训练集应当是独立同分布 (Identically and Independently Distributed,IID)的样本组成. 样本分布应当是固定的.

经验风险最小化 (Empirical Risk Minimization,ERM)准则:调整不同的参数θ,找到最小的平均损失.

结构风险最小化 (Structure Risk Minimization,SRM)准则:在经验风险最小化的基础上添加了正则化(Regularization) 正则化 (Regularization)理解:给损失函数添加上一个公式(通常用2-范数,等价于权重衰减),来限制损失函数的变化,从而不容易达到过拟合.

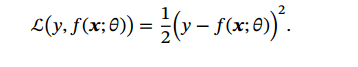

常用损失函数 :

平方损失函数,一般不适用于分类问题,用于预测标签y为实数值的任务

交叉熵损失函数,一般用于分类问题(不仅二分类)

常见的超参数 包括: 聚类算法中的类别个数、 梯度下降法中的步长、 正则化项的系数、神经网络的层数、支持向量机中的核函数等.

提前停止 (Early Stop):如果在验证集上的错误率不再下降,就停止迭代. 可以防止过拟合.

随机梯度下降法 (Stochastic Gradient Descent,SGD):在每次迭代时只采集一个样本, 计算这个样本损失函数的梯度并更新参数.

第 𝑡 次迭代时,随机选取一个包含 𝐾(2的n次方计算效率高) 个样本的子集 𝒮𝑡,计算这个子集上每个样本损失函数的梯度并进行平均,然后再进行参数更新:

批量梯度下降和随机梯度下降之间的区别 :在于每次迭代的优化目标是对所有样本的平均损失函数还是单个样本的损失函数.

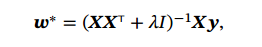

共线性 (collinearity):一个特征可以通过其他特征的线性组合来被准确的预测.

岭回归 (Ridge Regression):给XX^T的对角线元素都加上一个常数𝜆使得 (𝑿𝑿^T + 𝜆𝐼)满秩.

岭回归的解:相当于结构风险最小化准则下的最小二乘法估计,其目标函数是: 最小二乘法 (Least Square Method, LSM):

机器学习任务分为两类:

1.样本的特征向量x和标签y之间存在未知的函数关系.

2.条件概率p(y|x)服从某个未知分布.

最大似然估计 (Maximum Likelihood Estimation,MLE):找到一组参数w使得似然函数p(y|X;w,𝜎)最大,等价于它的对数似然函数 log p(y|X; w, 𝜎) 最大.

贝叶斯估计 (Bayesian Estimation): 一种估计 参数w的后验概率分布的方法,后验 = 先验 × 似然率;p(𝒚|𝑿; 𝒘, 𝜎)为 𝒘的似然函数,p(𝒘; 𝜈)为 𝒘的先验.

最大后验估计 (Maximum A Posteriori Estimation,MAP):最优参数为后验分布中概率密度最高的参数.

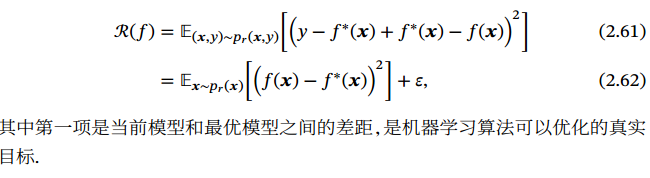

期望错误:理解为错误的平均值

按函数 𝑓(𝒙; 𝜃)的不同,机器学习算法可以分为线性模型和非线性模型;

按照学习准则的不同,机器学习算法也可以分为统计方法和非统计方法. (最常用)按照训练样本提供的信息以及反馈方式的不同,将机器学习算法分为以下几类:

监督学习(Supervised Learning,SL):机器学习的目标是通过建模样本的特征 𝒙 和标签 𝑦 之间的关系𝑦 = 𝑓(𝒙; 𝜃) 或 𝑝(𝑦|𝒙; 𝜃),并且训练集中每个样本都有标签

回归(Regression):标签 𝑦 是连续值(实数或连续整数), 𝑓(𝒙; 𝜃)的输出也是连续值

分类(Classification):标签 𝑦 是离散的类别(符号). 在分类问题中, 学习到的模型也称为分类器(Classifier). 分类问题根据其类别数量又可分为二分类(Binary Classification)和多分类(Multi-class Classification)问题.

图像特征 :将N * M的图像的所有像素存储为N*M向量,也会经常加入一个额外的特征,比如直方图、宽高比、笔画数、纹理特征、边缘特征等.

文字特征 :词袋(Bag-of-Words,BoW)模型,类似one-hot向量.还可以改进为二元特征,即两个词的组合.

特征学习 (Feature Learning), 也叫表示学习(Representation Learning):如何让机器自动地学习出有效的特征.

特征选择 (Feature Selection):是选取原始特征集合的一个有效子集, 使得 基于这个特征子集训练出来的模型准确率最高.

子集搜索(Subset Search):假设原始特征数为 𝐷, 则共有 2^𝐷 个候选子集. 特征选择的目标是选择一个最优的候选子集.常用的方法是采用贪心的策略: 由空集合开始, 每一轮添加该轮最优的特征,称为前向搜索(Forward Search);或者从原始特征集合开始,每次删除最无用的特征,称为反向搜索(Backward Search).

过滤式方法(Filter Method)不依赖具体的机器学习模型. 每次增加最有信 息量的特征,或删除最没有信息量的特征. 信息量可以通过信息增益(Information Gain)衡量.

包裹式方法(Wrapper Method)是用后续机器学习模型的准确率来评价一 个特征子集. 每次增加对后续机器学习模型最有用的特征,或删除对后续机器学 习任务最无用的特征.将机器学习模型包裹到特征选择过程的内部.

准确率:预测准确的概率.

微平均 是每一个样本的性能指标的算术平均值 . 对于单个样本而言,它的精确率和召回率是相同的(要么都是 1,要么都是 0). 因此精确率的微平均和召回率的微平均是相同的. 同理,F1值的微平均指标是相同的. 当不同类别的样本数量不均衡时,使用宏平均会比微平均更合理些.

实际使用的评估方式:AUC(Area Under Curve)、 ROC(Receiver Operating Characteristic)曲线、PR(Precision-Recall)曲线

ROC曲线:

纵轴是真正例率 (True Positive Rate, 简称TPR,也是召回率): 所有实际为正例中,真的正例的比率.

横轴是假正例率 (False Positive Rate,简称FPR):所有实际为负例中,预测为正例的比率.

ROC曲线作用:

ROC曲线能很容易的查出任意阈值对学习器的泛化性能影响。

有助于选择最佳的阈值。ROC曲线越靠近左上角,模型的查全率就越高。最靠近左上角的ROC曲线上的点是分类错误最少的最好阈值,其假正例和假反例总数最少。

可以对不同的学习器比较性能。将各个学习器的ROC曲线绘制到同一坐标中,直观地鉴别优劣,靠近左上角的ROC曲所代表的学习器准确性最高。

AUC面积作用:衡量二分类模型优劣的一种评价指标,表示预测的正例排在负例前面的概率。

交叉验证 (Cross Validation):把原始数据集平均分为 𝐾 组不重复的子集,每次选 𝐾 − 1组子集作 (𝐾 一般大于 3,可以选10).为训练集,剩下的一组子集作为验证集. 这样可以进行 𝐾 次试验并得到 𝐾 个模型,将这𝐾 个模型在各自验证集上的错误率的平均作为分类器的评价.

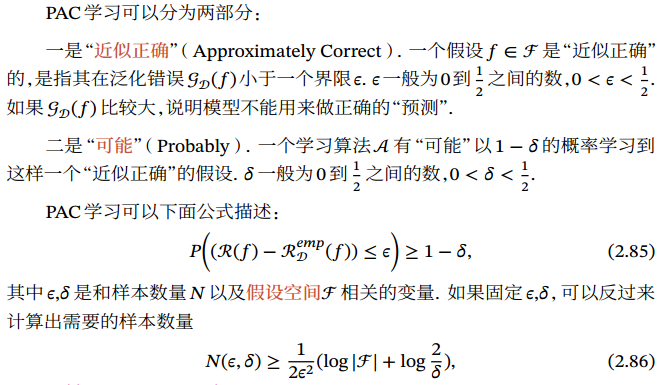

可能近似正确 (Probably Approximately Correct,PAC)学习理论:PAC可学习指该学习算法能够在多项式时间内从合理数量的训练数据 中学习到一个近似正确的;𝑓(𝒙)模型越复杂,需要样本越多;

没有免费的午餐 (No Free Lunch Theorem,NFL):如果一个算法 对某些问题有效,那么它一定在另外一些问题上比纯随机搜索算法更差. 也就是 说,不能脱离具体问题来谈论算法的优劣.

丑小鸭定理 (Ugly Duckling Theorem):“ 丑小鸭与白天鹅之间的区别和两只白天鹅之间的区别一样大”. 世界上不存在分类的客观标准,一切分类的标准都是主观的。

奥卡姆剃刀原理 (Occam’s Razor):“ 如无必要,勿增实体”.简单的模型泛化能力更好. 如果有两个性能相近的模型,我们应该选择更简单的模型. 因此,在机器学习的学习准则上,我们经常会引入参数正则化来限制模型能力,避免过拟合.

奥卡姆剃刀的一种形式化是最小描述长度(Minimum Description Length, MDL)原则:即对一个数据集 𝒟,最好的模型 𝑓 ∈ ℱ 是会使得数据集的压缩效果最好,即编码长度最小.

归纳偏置 (Inductive Bias):经常会对学习的问题做一些假设,在贝叶斯学习中也经常称为先验(Prior).在最近邻分类器中,我们会假设在特征空间中,一个小的局部区域中的大部分样本都同属一类. 在朴素贝叶斯分类器中,我们会假设每个特征的条件概率是互相独立的.

《第七章》 优化:优化算法的目标函数通常是一个基于训练数据集的损失函数,优化的目标在于降低训练误差。

深度学习:深度学习的目标在于降低泛化误差。为了降低泛化误差,除了使用优化算法降低训练误差以外,还需要注意应对过拟合。

梯度接近0时,是为局部最优点或鞍点:由于学习模型参数维度通常是高维,所以鞍点比局部最小值更常见。

《第三章》 《第三章》

线性回归模型 的房屋价格预测表达式为:

假设我们采集的样本数为n,索引为i的样本的特征为x(i)1 和x(i)2 ,标签为y(i) 。对于索引为i的房屋,w指权重(weight),b是偏差(bias),y帽是预估。

平方损失函数

《第六章》 语言模型 (language model)是自然语言处理的重要技术。自然语言处理中最常见的数据是文本数据。我们可以把一段自然语言文本看作一段离散的时间序列。

可以计算为w1,w2两词相邻的频率与w1词频的比值,因为该比值即P(w1,w2)与P(w1)之比

P(w3∣w1,w2)同理可以计算为w1、w2和w3这3个词相邻的频率与w1和w2这2个词相邻的频率的比值。

复制 torch.empty(5, 3)

torch.rand(5, 3)

torch.zeros(5, 3, dtype=torch.long)

torch.tensor([5.5, 3]) # 根据数组创建

# 获取形状

print(x.size())

print(x.shape)

复制 x + y

torch.add(x, y)

y.add_(x) # 就地加法,类似x.copy_(y), x.t_()

复制 # 不同的size,但是是共享data

y = x.view(15)

z = x.view(-1, 5) # -1所指的维度可以根据其他维度的值推出来

print(x.size(), y.size(), z.size())

# torch.Size([5, 3]) torch.Size([15]) torch.Size([3, 5])

# 不同size,不同data的新副本

x_cp = x.clone().view(15) # clone会被记录在计算图中,即梯度传播影响源数据

# reshape() 不保证返回的一定是拷贝后的数据

复制 x = torch.randn(1)

print(x)

print(x.item())

# tensor([2.3466])

# 2.3466382026672363

复制 y[:] = y + x # 就地

y = y + x # 非就地

# view只是共享了tensor的数据,二者id(内存地址)并不一致。

# 因为tensor里面还有除了data的数据

复制 # tensor to numpy

a = torch.ones(5)

b = a.numpy()

复制 # numpy to tensor

import numpy as np

a = np.ones(5)

b = torch.from_numpy(a)

# torch.tensor()将NumPy数组转换成Tensor

# 该方法总是会进行数据拷贝

c = torch.tensor(a)

复制 import matplotlib.pyplot as plt

import numpy as np

# 展示图片

def imshow(img):

img = img / 2 + 0.5 # 逆归一化

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

plt.show()

# 获取一些图片

dataiter = iter(trainloader)

images, labels = dataiter.next()

print(images.size())

imshow(torchvision.utils.make_grid(images))

print(' '.join('%5s' % classes[labels[j]] for j in range(4)))

复制 torch.Size([4, 3, 32, 32])

复制 import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool = nn.MaxPool2d(2, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16 * 5 * 5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

net = Net()

print(net)

复制 Net(

(conv1): Conv2d(3, 6, kernel_size=(5, 5), stride=(1, 1))

(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(conv2): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))

(fc1): Linear(in_features=400, out_features=120, bias=True)

(fc2): Linear(in_features=120, out_features=84, bias=True)

(fc3): Linear(in_features=84, out_features=10, bias=True)

)

复制 import torch.optim as optim

criterion = nn.CrossEntropyLoss() # 交叉熵

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9) # 设置了动量的SGD

复制 for epoch in range(2):

running_loss = 0.0

for i, data in enumerate(trainloader, 0): # 从下标0开始迭代

inputs, labels = data # 读取数据

optimizer.zero_grad() # 初始梯度

outputs = net(inputs) # 前向传播

loss = criterion(outputs, labels) # 计算交叉熵

loss.backward() # 反向传播

optimizer.step() # 优化算法,学习参数

running_loss += loss.item()

if i % 2000 == 1999:

print('[%d, %5d] loss: %.3f' %

(epoch + 1, i + 1, running_loss / 2000))

running_loss = 0.0

print('Finished Training')

复制 [1, 2000] loss: 2.193

[1, 4000] loss: 1.864

[1, 6000] loss: 1.660

[1, 8000] loss: 1.559

[1, 10000] loss: 1.514

[1, 12000] loss: 1.468

[2, 2000] loss: 1.384

[2, 4000] loss: 1.356

[2, 6000] loss: 1.325

[2, 8000] loss: 1.317

[2, 10000] loss: 1.295

[2, 12000] loss: 1.271

Finished Training

复制 PATH = './cifar_net.pth'

torch.save(net.state_dict(), PATH) # 保存字典到指定文件

复制 dataiter = iter(testloader)

images, labels = dataiter.next()

imshow(torchvision.utils.make_grid(images))

print(' '.join('%5s' % classes[labels[j]] for j in range(4)))

复制 net = Net()

net.load_state_dict(torch.load(PATH))# 先加载数据,再将数据加载到net里,非必须

复制 <All keys matched successfully>

复制 outputs = net(images) # 使用训练后的数据,进行预测输出

_, predicted = torch.max(outputs, 1) # torch.max(data, dim)获取dim维向量的最大值,返回value,index

print('Predicted: ', ' '.join('%5s' % classes[predicted[j]]

for j in range(4)))

复制 Predicted: cat car ship plane

复制 correct = 0

total = 0

with torch.no_grad():

for data in testloader:

images, labels = data

outputs = net(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (

100 * correct / total))

复制 Accuracy of the network on the 10000 test images: 54 %

复制 class_correct = list(0. for i in range(10)) # 10个 0.

class_total = list(0. for i in range(10))

with torch.no_grad():

for data in testloader:

images,labels = data

outputs = net(images)

_, predicted = torch.max(outputs, 1)

c = (predicted==labels).squeeze() # 将结果压缩一个维度

for i in range(4): # 批量为4

label = labels[i]

class_correct[label] += c[i].item()

class_total[label] += 1

for i in range(10):

print('Accuracy of %5s : %2d %%' % (

classes[i], 100 * class_correct[i] / class_total[i]))

复制 Accuracy of plane : 57 %

Accuracy of car : 52 %

Accuracy of bird : 41 %

Accuracy of cat : 44 %

Accuracy of deer : 27 %

Accuracy of dog : 40 %

Accuracy of frog : 69 %

Accuracy of horse : 56 %

Accuracy of ship : 77 %

Accuracy of truck : 75 %

复制 device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

复制 net.to(device) # 所有的模块和参数都会递归的变成CUDA张量

inputs, labels = data[0].to(device), data[1].to(device) # 所有数据都需要在GPU normal(mean,std)/uniform(from,to)

Leetcode 根据不同题目的标签,先从简单题刷起,最开始尽可能做,后面可能速度降下来。

可以的话每天三题左右。

能翻墙就翻墙吧,然后不要乱配置源,保持原始的就可以了,有些包在国内版本比较低,有冲突。

学会使用conda管理包,以及查看下载包时候出现的异常,尤其是标红的,很重要,不要轻易忽略,不然就是下一个坑。

jupyter lab也挺好用的,但是第一次使用,由于各个包版本没有搞好,导致404无法连接服务器,也就无法运行python,原因如第4点所说。

吐槽一下,在使用jupyter时候,好像不能同时打开同一个虚拟环境的另一个命令行,也就难以同时进行包管理。或者是我打开方式不对?

size——元素总数

zeros默认置零,ones默认置1,记得因为复数,有s

array——多维数组最普通的定义方式,array()括号里的即为数组

nd.random.normal(a,b,shape=(3,4))——random指随机,normal指正态分布,a-b范围

+-*/,还有==,都是逐个元素进行的。exp(),dot()也是,其中dot记得通过X.T转置

concat(X,Y,dim=0),将第0个维度(shape中的第0个元素)保持不变,其他结合起来。

sum()——获得所有元素的和,list内一个浮点数

norm()——逐个元素的平方之和后开根号=欧几里得范数(长度)=L2范数(长度),是list

asscalar()——as scalar,作为标量,即list-->num

broadcasting,广播机制——犹如C语言中的类型自动转换,将多维数组的长度扩大到可以进行运算,以保证运算的正确性。经测试发现,只有一行或者一列才可以广播,此时无歧义。

内存不变的操作:Z[:] = X + Y;nd.elemwise_add(X, Y, out=Z);X += Y; X[:] = X + Y

Y = Y + X; 会新开内存Y和临时内存X+Y

nd.array(P)——转换numpy-->NDArray

D.asnumpy()——转换NDArray-->numpy

替换成其他形状,结果是否和预期一样?

复制 from mxnet import autograd, nd

x = nd.arange(4).reshape((4, 1))

# 申请梯度的内存

x.attach_grad()

# 在运算的过程中记录梯度,此时从预测模式转为训练模式

with autograd.record():

y = 2 * nd.dot(x.T, x)

# 求梯度,y如果不是标量会求和

y.backward()

似然函数理解:它是给定联合样本值x下关于(未知)参数θ的函数,也就是将x作为常数,关于参数的函数.

Hinge损失函数,适用于二分类,y为-1或1.

符号定义 : [ x ] + = m a x ( 0 , x ) 符号定义:[x]_+=max(0,x) 符号定义 : [ x ] + = ma x ( 0 , x )

结构化学习(Structured Learning):问题的输出 𝒚通常是结构化的对象,比如序列、树或图等.

无监督学习(Unsupervised Learning,UL):指从不包含目标标签的训练样本中自动学习到一些有价值的信息. 典型的无监督学习问题有聚类、密度估计、特征学习、降维等

强化学习(Reinforcement Learning, RL)是一类通过交互来学习的机器学习算法. 在强化学习中,智能体根据环境的状态做出一个动作,并得到即时或延时的奖励. 智能体在和环境的交互中不断学习并调整策略,以取得最大化的期望总回报.

ℓ1 正则化: 通过 ℓ1 正则化来实现特征选择. 由于 ℓ1 正则化会导致稀疏特征,因此间接实现了特征选择. 特征抽取 (Feature Extraction):可以分为监督和无监督的方法. 监督的特征学习的目标是抽取对一个特定的预测任务最有用的特征,比如线性判别分析(Linear Discriminant Analysis,LDA). 而无监督的特征学习和具体任务无关,其目标通常是减少冗余信息和噪声,比如主成分分析(Principal Component Analysis,PCA). 维度灾难 (Curse of Dimensionality). 深度学习(Deep Learning, DL):如果我们将特征的表示学习和机器学习的预测学习有机地统一到一个模型中,建立一个端到端的学习算法,就可以有效地避免它们之间准则的不一致性. 目前比较有效的模型是神经网络,即将最后的输出层作为预测学习,其他层作为表示学习.

目标函数 = ∑ (观测值 − 理论值 ) 2 目标函数 = \sum\limits(观测值-理论值)^2 目标函数 = ∑ (观测值 − 理论值 ) 2

卷积运算:与互相关运算类似。为了得到卷积运算的输出,我们只需将核数组左右翻转并上下翻转,再与输入数组做互相关运算。可见,卷积运算和互相关运算虽然类似,但如果它们使用相同的核数组,对于同一个输入,输出往往并不相同。

特征图(feature map):二维卷积层输出的二维数组可以看作输入在空间维度(宽和高)上某一级的表征。

x的感受野(receptive field):影响元素x的前向计算的所有可能输入区域(可能大于输入的实际尺寸)。

corr2d因为用了[i,j]=导致自动求导失败,这是由于autograd目前还有局限性。在该类的forward函数里,将corr2d函数替换成nd.Convolution类使得自动求梯度变得可行。

构造一个输入图像X,令它有水平方向的边缘。如何设计卷积核K来检测图像中水平边缘?如果是对角方向的边缘呢?

答:检测水平边缘的核函数应该是[[1],[-1]];对角方向的核函数[1, -1]或[[1],[-1]]或[[1, 0],[-1, 0]];

对角方向的边缘监测方法有按水平的,按垂直的,或者都要。根据不同的方法,宽高会发生相应变化。

试着对我们自己构造的Conv2D类进行自动求梯度,会有什么样的错误信息?在该类的forward函数里,将corr2d函数替换成nd.Convolution类使得自动求梯度变得可行。

答:infershape[attrs.op](attrs, &in_shapes, &out_shapes)

corr2d因为用了[i,j]=导致自动求导失败,这是由于autograd目前还有局限性。改成 nd.Convolution 会没问题,因为它手动实现了backward函数。

如何通过变化输入和核数组将互相关运算表示成一个矩阵乘法?

答:卷积变成矩阵乘法,从乘法和加法的运算次数上看,两者没什么差别,但是转化成矩阵后,运算时需要的数据被存在连续的内存上,这样访问速度大大提升。

方法参考如下:https://www.cnblogs.com/marsggbo/p/12074297.html

如何构造一个全连接层来进行物体边缘检测?

答:全连接层是指使用nn.Dense?

填充(padding)是指在输入高和宽的两侧填充元素(通常是0元素)。

在输入的高和宽两侧分别填充了0元素的二维互相关计算 步幅(stride):我们将每次滑动的行数和列数。输出第一列第二个元素时,卷积窗口向下滑动了3行,而在输出第一行第二个元素时卷积窗口向右滑动了2列。当卷积窗口在输入上再向右滑动2列时,由于输入元素无法填满窗口,无结果输出。

对本节最后一个例子通过形状计算公式来计算输出形状,看看是否和实验结果一致。

一致,分别是8/3和8/4的下取整。

通道(channel)维:例如,彩色图像在高和宽2个维度外还有RGB(红、绿、蓝)3个颜色通道。称其通道维为3.

多输出通道:每个输出通道上的结果由卷积核在该输出通道上的核数组 与整个输入数组 计算而来。

卷积窗口形状为1×1(k_h=k_w=1)的多通道卷积层。

假设我们将通道维当作特征维,将高和宽维度上的元素当成数据样本,那么1×1卷积层的作用与全连接层等价。

假设输入形状为ci×h×w,且使用形状为co×ci×kh×kw、填充为(ph,pw)、步幅为(sh,sw)的卷积核。那么这个卷积层的前向计算分别需要多少次乘法和加法?

答:

输出形状为:

c o × ⌊ ( n h − k h + p h + s h ) / s h ⌋ × ⌊ ( n w − k w + p w + s w ) / s w ⌋ c_o\times\lfloor(n_h-k_h+p_h+s_h)/s_h\rfloor \times \lfloor(n_w-k_w+p_w+s_w)/s_w\rfloor c o × ⌊( n h − k h + p h + s h ) / s h ⌋ × ⌊( n w − k w + p w + s w ) / s w ⌋ 乘法次数:

k h × k w × c o × ⌊ ( n h − k h + p h + s h ) / s h ⌋ × ⌊ ( n w − k w + p w + s w ) / s w ⌋ k_h\times k_w \times c_o\times\lfloor(n_h-k_h+p_h+s_h)/s_h\rfloor \times \lfloor(n_w-k_w+p_w+s_w)/s_w\rfloor k h × k w × c o × ⌊( n h − k h + p h + s h ) / s h ⌋ × ⌊( n w − k w + p w + s w ) / s w ⌋ 加法次数:

[ ( k h × k w ) 个数连加 ] × ( c i 个数连加 ) × ⌊ ( n h − k h + p h + s h ) / s h ⌋ × ⌊ ( n w − k w + p w + s w ) / s w ⌋ [(k_h\times k_w)个数连加]\times(c_i个数连加)\times\lfloor(n_h-k_h+p_h+s_h)/s_h\rfloor \times \lfloor(n_w-k_w+p_w+s_w)/s_w\rfloor [( k h × k w ) 个数连加 ] × ( c i 个数连加 ) × ⌊( n h − k h + p h + s h ) / s h ⌋ × ⌊( n w − k w + p w + s w ) / s w ⌋ 翻倍输入通道数ci和输出通道数co会增加多少倍计算?翻倍填充呢?

答:通过上述公式可以看出,翻倍导致增加的计算程度大致为:c_o > c_i > p_h(p_w)

如果卷积核的高和宽kh=kw=1,能减少多少计算?

答:乘法降低至1/(k_h乘k_w),加法降低至1/(k_w*k_h-1)

本节最后一个例子中的变量Y1和Y2完全一致吗?原因是什么?

答:不完全一致,一个是通过矩阵乘法的方式、一个是通过互相关计算。某个地方的精度不一样,比如说没有批量求和。

当卷积窗口不为1×1时,如何用矩阵乘法实现卷积计算?

答:将卷积窗口reshape为行向量或者列向量。

池化层:池化层每次对输入数据的一个固定形状窗口(又称池化窗口)中的元素计算输出。池化层直接计算池化窗口内元素的最大值或者平均值。该运算也分别叫做最大池化或平均池化。

池化窗口形状为\ :math:2\times 2\ 的最大池化 池化层的一个主要作用是缓解卷积层对位置的过度敏感性。(把数组按局部矩阵最大值或者局部矩阵平均值缩小)

分析池化层的计算复杂度。假设输入形状为c×h×w,我们使用形状为ph×pw的池化窗口,而且使用(ph,pw)填充和(sh,sw)步幅。这个池化层的前向计算复杂度有多大?

答:时间复杂度为

c × ⌊ ( h − k h + p h + s h ) / s h ⌋ × ⌊ ( w − k w + p w + s w ) / s w ⌋ × ( m a x 的时间 ) c×⌊(h−k_h+p_h+s_h)/s_h⌋×⌊(w−k_w+p_w+s_w)/s_w⌋×(max的时间) c × ⌊( h − k h + p h + s h ) / s h ⌋ × ⌊( w − k w + p w + s w ) / s w ⌋ × ( ma x 的时间 ) 想一想,最大池化层和平均池化层在作用上可能有哪些区别?

答:平均池化可以提取背景信息,减少冲击失真,模糊,平滑。最大池化可以提取特征纹理,增强图片亮度

觉得最小池化层这个想法有没有意义?

答:因为像素值为正数决定的,0代表为黑色。采用最小池化很可能全部都是0。最小池化甚至会让你的神经网络轻易过拟合甚至无法训练。

卷积层块里的基本单位是卷积层后接最大池化层 :卷积层用来识别图像里的空间模式,如线条和物体局部,之后的最大池化层则用来降低卷积层对位置的敏感性。卷积层块由两个这样的基本单位重复堆叠构成。

LeNet交替使用卷积层和最大池化层后接全连接层来进行图像分类。

尝试基于LeNet构造更复杂的网络来提高分类准确率。例如,调整卷积窗口大小、输出通道数、激活函数和全连接层输出个数。在优化方面,可以尝试使用不同的学习率、初始化方法以及增加迭代周期。

答:1.换成relu激活函数显著提高。2.第二个卷积层的通道可以适当减少。神经网络定义如上,其他超参数如下。

LeNet的优缺点:小数据集成绩不错,但在大数据集上表现不尽人意。

尝试增加迭代周期。跟LeNet的结果相比,AlexNet的结果有什么区别?为什么?

答:AlexNet的训练时间长了三倍,训练结果精确度提高了10%左右。AlexNet精确率提高的慢一点。应该是用了dropout以及lr比较低的原因。同时神经网络复杂度大大提高。

AlexNet对Fashion-MNIST数据集来说可能过于复杂。试着简化模型来使训练更快,同时保证准确率不明显下降。

答:看5.5章我的笔记中的改进后的LeNet。个人以为,改为relu激活就能显著提高精确率了,对于Fashion-MNIST而言。

修改批量大小,观察准确率和内存或显存的变化。

答:批量改成256,准确率降低。显存没有看出显著变化。可能观测方法有问题,只是通过任务管理器的性能来查看。

VGG块的组成规律是:连续使用数个相同的填充为1、窗口形状为3×3的卷积层后接上一个步幅为2、窗口形状为2×2的最大池化层。卷积层保持输入的高和宽不变,而池化层则对其减半。

与AlexNet相比,VGG通常计算慢很多,也需要更多的内存或显存。试分析原因。

答:通道更多,刚开始时候的宽高更大。

尝试将Fashion-MNIST中图像的高和宽由224改为96。这在实验中有哪些影响?

答:时间缩短到了1/4,精确率略低于224的。

参考VGG论文里的表1来构造VGG其他常用模型,如VGG-16和VGG-19 [1]。

下图是AlexNet和VGG的网络结构局部和NiN的网络结构局部的对比:

左图是AlexNet和VGG的网络结构局部,右图是NiN的网络结构局部 1×1卷积层。它可以看成全连接层。其中空间维度(高和宽)上的每个元素相当于样本,通道相当于特征。

调节超参数,提高分类准确率。

答:1.通过提高迭代次数可以提高准确率。2.降低dropout的值至0.3能有效提高准确率。

batch_size过大会内存溢出。lr降低或提高均无显著效果。

为什么NiN块里要有两个1×1卷积层?去除其中的一个,观察并分析实验现象。

答:第一个1x1卷积层实现feature map的提取,第二个1x1卷积层进行feature map的组合

现象:1.时间降低。因为模型复杂度降低。2.第二轮的精确率下降了。3.最终精确率低。

原因可能是少了一个卷积层导致了feature map没有组合在一起,从而缺少了一部分的特征。直接通过feature map来回归参数。

feature map:在每个卷积层,数据都是以三维形式存在的。你可以把它看成许多个二维图片叠在一起,其中每一个称为一个feature map。

前3条线路使用窗口大小分别是1×1、3×3和5×5的卷积层来抽取不同空间尺寸下的信息,其中中间2个线路会对输入先做1×1卷积来减少输入通道数,以降低模型复杂度。

第四条线路则使用3×3最大池化层,后接1×1卷积层来改变通道数。

4条线路都使用了合适的填充来使输入与输出的高和宽一致 。

最后我们将每条线路的输出在通道维上连结,并输入接下来的层中去。

对比AlexNet、VGG和NiN、GoogLeNet的模型参数尺寸。为什么后两个网络可以显著减小模型参数尺寸?

NiN:去掉了AlexNet最后的3个全连接层,取而代之地,NiN使用了输出通道数等于标签类别数的NiN块,然后使用全局平均池化层对每个通道中所有元素求平均并直接用于分类。NiN的这个设计的好处是可以显著减小模型参数尺寸,从而缓解过拟合。

GoogLeNet:其中中间2个线路会对输入先做1×1卷积来减少输入通道数,以降低模型复杂度。另外,Inception块中可以自定义的超参数是每个层的输出通道数,我们以此来控制模型复杂度。

第一步:首先,对小批量BB求均值和方差,其中平方计算是按元素求平方。

第二步:接下来,使用按元素开方和按元素除法对x(i)标准化,ϵ>0是一个很小的常数,保证分母大于0。

第三步:引入两个可学习参数,拉伸(scale)参数 γ和偏移(shift)参数 β,如果批量归一化无益,学出的模型可以不使用批量归一化。两个参数和x(i)形状相同为d维向量。它们与x(i)分别做按元素乘法(符号⊙)和加法计算:

**卷积层的批量归一化:**批量归一化发生在卷积计算之后、应用激活函数之前。如果卷积计算输出多个通道,我们需要对这些通道的输出分别做批量归一化,且每个通道都拥有独立的拉伸和偏移参数,并均为标量。

**预测时的批量归一化:**训练时,我们可以将批量大小设得大一点。预测时,一种常用的方法是通过移动平均估算整个训练数据集的样本均值和方差,并在预测时使用它们得到确定的输出。ps:移动平均法arrow-up-right

在模型训练时,批量归一化利用小批量上的均值和标准差,不断调整神经网络的中间输出,从而使整个神经网络在各层的中间输出的数值更稳定。

批量归一化层和丢弃层一样,在训练模式和预测模式的计算结果是不一样的。

能否将批量归一化前的全连接层或卷积层中的偏差参数去掉?为什么?(提示:回忆批量归一化中标准化的定义。)

答:不行,为了使得均值为0、标准差为1。

尝试将批量归一化层插入LeNet的其他地方,观察并分析结果的变化。

答:将批量归一化插入到激活函数之后,跟训练集的拟合效果更好了。先通过激活函数过滤了一些数据,所以拟合效果更好了。batchnorm主要是让收敛变快,但对acc影响不大。

尝试一下不学习拉伸参数gamma和偏移参数beta(构造的时候加入参数grad_req='null'来避免计算梯度),观察并分析结果。

答:结果acc略低于原来的1%左右,因为归一化不一定有益,需要通过学习拉伸参数和偏移参数来使其达到最佳的程度。

查看BatchNorm类的文档来了解更多使用方法,例如,如何在训练时使用基于全局平均的均值和方差。

答:nn.BatchNorm(use_global_stats=True)可以设置使用全局。

设输入为x。假设图中最上方激活函数输入的理想映射为f(x)。

右图虚线框中的部分需要拟合出有关恒等映射的残差映射f(x)−x

残差块通过跨层的数据通道从而能够训练出有效的深度神经网络。

参考ResNet论文的表1来实现不同版本的ResNet [1]。

对于比较深的网络, ResNet论文中介绍了一个“瓶颈”架构来降低模型复杂度。尝试实现它 [1]。

答:”For each residual function F, we use a stack of 3 layers instead of 2 (Fig. 5). The three layers are 1×1, 3×3, and 1×1 convolutions, where the 1×1 layers are responsible for reducing and then increasing (restoring) dimensions, leaving the 3×3 layer a bottleneck with smaller input/output dimensions.“

未实现,思路是:修改resnet_block函数设置1×1, 3×3, and 1×1 的三个卷积层。

在ResNet的后续版本里,作者将残差块里的“卷积、批量归一化和激活”结构改成了“批量归一化、激活和卷积”,实现这个改进([2],图1)。

DenseNet论文中提到的一个优点是模型参数比ResNet的更小,这是为什么?

答:ResNet里通过步幅为2的残差块在每个模块之间减小高和宽。DenseNet使用过渡层来减半高和宽,并减半通道数。

DenseNet被人诟病的一个问题是内存或显存消耗过多。真的会这样吗?可以把输入形状换成224×224,来看看实际的消耗。

答:cudaMalloc failed: out of memory

实现DenseNet论文中的表1提出的不同版本的DenseNet [1]。

答:

若输入矩阵 : n h × n w 且卷积核 : k h × k w 则输出形状 : ( n h − k h + 1 ) × ( n w − k w + 1 ) 若输入矩阵:n_h\times n_w\\ 且卷积核:k_h\times k_w\\ 则输出形状:(n_h-k_h+1) \times (n_w-k_w+1) 若输入矩阵 : n h × n w 且卷积核 : k h × k w 则输出形状 : ( n h − k h + 1 ) × ( n w − k w + 1 ) 若高填充 p h 行,宽填充 p w 行,则输出形状 ( n h − k h + p h + 1 ) × ( n w − k w + p w + 1 ) 可以设置 p h = k h − 1 , p w = k w − 1 使得输入和输出形状一致。 若高填充p_h行,宽填充p_w行,则输出形状(n_h-k_h+p_h+1)\times(n_w-k_w+p_w+1)\\ 可以设置p_h=k_h-1,p_w=k_w-1使得输入和输出形状一致。 若高填充 p h 行,宽填充 p w 行,则输出形状 ( n h − k h + p h + 1 ) × ( n w − k w + p w + 1 ) 可以设置 p h = k h − 1 , p w = k w − 1 使得输入和输出形状一致。 当高上步幅为 s h ,宽上步幅为 s w 时,输出形状为 : ⌊ ( n h − k h + p h + s h ) / s h ⌋ × ⌊ ( n w − k w + p w + s w ) / s w ⌋ 如果设置 p h = k h − 1 和 p w = k w − 1 ,那么输出形状将简化为 : ⌊ ( n h + s h − 1 ) / s h ⌋ × ⌊ ( n w + s w − 1 ) / s w ⌋ 更进一步,如果输入的高和宽能分别被高和宽上的步幅整除,那么输出形状将是 ( n h / s h ) × ( n w / s w ) 。 当高上步幅为s_h ,宽上步幅为s_w 时,输出形状为:\\ \lfloor(n_h-k_h+p_h+s_h)/s_h\rfloor \times \lfloor(n_w-k_w+p_w+s_w)/s_w\rfloor\\如果设置 p_h=k_h−1 和 p_w=k_w−1 ,那么输出形状将简化为 :\\⌊(n_h+s_h−1)/s_h⌋×⌊(n_w+s_w−1)/s_w⌋ \\ 更进一步,如果输入的高和宽能分别被高和宽上的步幅整除,那么输出形状将是 (n_h/s_h)×(n_w/s_w) 。 当高上步幅为 s h ,宽上步幅为 s w 时,输出形状为 : ⌊( n h − k h + p h + s h ) / s h ⌋ × ⌊( n w − k w + p w + s w ) / s w ⌋ 如果设置 p h = k h − 1 和 p w = k w − 1 ,那么输出形状将简化为 : ⌊( n h + s h − 1 ) / s h ⌋ × ⌊( n w + s w − 1 ) / s w ⌋ 更进一步,如果输入的高和宽能分别被高和宽上的步幅整除,那么输出形状将是 ( n h / s h ) × ( n w / s w ) 。

μ B ← 1 m ∑ i = 1 m x ( i ) , σ B 2 ← 1 m ∑ i = 1 m ( x ( i ) − μ B ) 2 , \boldsymbol{\mu}_\mathcal{B} \leftarrow \frac{1}{m}\sum_{i = 1}^{m} \boldsymbol{x}^{(i)},\\\boldsymbol{\sigma}_\mathcal{B}^2 \leftarrow \frac{1}{m} \sum_{i=1}^{m}(\boldsymbol{x}^{(i)} - \boldsymbol{\mu}_\mathcal{B})^2, μ B ← m 1 i = 1 ∑ m x ( i ) , σ B 2 ← m 1 i = 1 ∑ m ( x ( i ) − μ B ) 2 , x ^ ( i ) ← x ( i ) − μ B σ B 2 + ϵ , \hat{\boldsymbol{x}}^{(i)} \leftarrow \frac{\boldsymbol{x}^{(i)} - \boldsymbol{\mu}_\mathcal{B}}{\sqrt{\boldsymbol{\sigma}_\mathcal{B}^2 + \epsilon}}, x ^ ( i ) ← σ B 2 + ϵ x ( i ) − μ B , y ( i ) ← γ ⊙ x ^ ( i ) + β . {\boldsymbol{y}}^{(i)} \leftarrow \boldsymbol{\gamma} \odot \hat{\boldsymbol{x}}^{(i)} + \boldsymbol{\beta}. y ( i ) ← γ ⊙ x ^ ( i ) + β .

当函数的海森矩阵在梯度为0的位置上的特征值全为正时,该函数得到局部最小值。

当函数的海森矩阵在梯度为0的位置上的特征值全为负时,该函数得到局部最大值。

当函数的海森矩阵在梯度为0的位置上的特征值有正有负时,该函数得到鞍点。

对于k维参数,第一种的概率是0.5^k。显然鞍点的概率大。

低维空间的非凸优化问题:主要是存在一些局部最优点。采用梯度下降方法时,不合适的参数初始化会导致陷入局部最优点,因此主要的难点是如何选择初始化参数和逃离局部最优点。 高维空间中非凸优化的难点:并不在于如何逃离局部最优点,而是如何逃离鞍点。鞍点(saddle point)是梯度为0,但是在一些维度上是最高点,在另一些维度上是最低点。

对于深度学习中的优化问题,你还能想到哪些其他的挑战?

答:学习率的调整,如何选择初始化参数。

numpy.meshgrid()——生成网格点坐标矩阵

举例:X轴可以取三个值1,2,3, Y轴可以取三个值7,8, 请问可以获得多少个点的坐标?

学习率过大或过小都有问题。一个合适的学习率通常是需要通过多次实验找到的。

当训练数据集的样本较多时,梯度下降每次迭代的计算开销较大O(n),因而随机梯度下降通常更受青睐O(1)。理解:梯度下降需要梯度求和后取平均,随机梯度下降只采样一个来计算梯度。

使用一个不同的目标函数,观察梯度下降和随机梯度下降中自变量的迭代轨迹。

答:梯度下降比较线性,随机梯度下降比较曲折。

在二维梯度下降的实验中尝试使用不同的学习率,观察并分析实验现象

答:小于0.5的还可以用,超过的无法收敛。学习率偏低会导致无法接近0点,稍高会在0点附近徘徊,过高则不收敛。猜测过高导致了接近指数爆炸的效果。

小批量随机梯度下降中每次迭代的计算开销为O(|B|)。

当批量大小等于训练数据样本数时,该算法即梯度下降。

修改批量大小和学习率,观察目标函数值的下降速度和每个迭代周期的耗时。

答:批量小,每个迭代周期耗时长,下降速度快。近似随机梯度下降。

学习率提高,梯度下降速度越快,每个迭代周期耗时不影响。

查阅MXNet文档,使用Trainer类的set_learning_rate函数,令小批量随机梯度下降的学习率每过一个迭代周期减小到原值的1/10。

答:在迭代周期的循环中添加如下代码。

换句话说,相比于小批量随机梯度下降,动量法在每个时间步的自变量更新量近似于将前者对应的最近1/(1−γ)个时间步 的更新量做了指数加权移动平均后再除以1−γ。所以,在动量法中,自变量在各个方向上的移动幅度不仅取决于当前梯度,还取决于过去的各个梯度在各个方向上是否一致。这样,我们就可以使用较大的学习率,从而使自变量向最优解更快移动

动量γ=0.5,则是1/(1-γ)=2倍小批量梯度下降。

动量γ=0.9,则是1/(1-γ)=10倍小批量梯度下降。

后者是前者五倍,直觉上,后者学习率应该是前者1/5.

使用其他动量超参数和学习率的组合,观察并分析实验结果。

答:momentum越小,结果越不陡。原因:动量越小,相当于越小批量的梯度下降,所以损失降低慢。其他组合改变如上述笔记。

AdaGrad算法,它根据自变量在每个维度的梯度值的大小来调整各个维度上的学习率,从而避免统一的学习率难以适应所有维度的问题。

AdaGrad算法在迭代过程中不断调整学习率,并让目标函数自变量中每个元素都分别拥有自己的学习率。

使用AdaGrad算法时,自变量中每个元素的学习率在迭代过程中一直在降低(或不变)。(AdaGrad算法在迭代后期由于学习率过小,可能较难找到一个有用的解。)

在介绍AdaGrad算法的特点时,我们提到了它可能存在的问题。你能想到什么办法来解决这个问题?

答:AdaGrad算法在迭代后期由于学习率过小,可能较难找到一个有用的解。解决办法:学习率降低的慢一点?指数加权移动平均。

在实验中尝试使用其他的初始学习率,结果有什么变化?

答:损失函数变得曲折了起来。

MSProp算法使用了小批量随机梯度按元素平方的指数加权移动平均来调整学习率。改进了AdaGrad算法。

因为RMSProp算法的状态变量s_t是对平方项g_t⊙g_t的指数加权移动平均,所以可以看作最近1/(1−γ)个时间步的小批量随机梯度平方项的加权平均。

在同样的学习率下,RMSProp算法可以更快逼近最优解。

把γ的值设为1,实验结果有什么变化?为什么?

答:损失函数发散扩大,而后Maximum allowed size exceeded。即获得nan。因为一开始的s_t为0,一直没有增长。后面学习率η除以ε的开根号,所得的值,导致自变量降低很大。

试着使用其他的初始学习率和γ超参数的组合,观察并分析实验结果。

答:原来:初始学习率0.01 和γ超参数0.9

修改1:初始学习率0.01 和γ超参数0.99-------结果:损失函数比原来更快下降。因为s_t变小,所以学习率更容易变大。

修改2:初始学习率0.05 和γ超参数0.90-------结果:损失函数比原来更快下降,后期更曲折,有增有减。因为η变大,所以学习率更容易变大。后期由于学习率还是增大,所以变得曲折。

小批量随机梯度g_t按元素平方的指数加权移动平均变量s_t

状态变量Δx_t,在时间步0时被初始化为0。记录自变量变化量g′t按元素平方的指数加权移动平均。

AdaDelta算法没有学习率超参数,它通过使用有关自变量更新量平方的指数加权移动平均的项

调节AdaDelta算法中超参数ρ的值,观察实验结果。

答:有点类似学习率,增大就容易降低损失。但是直觉上觉得,比学习率更稳定可靠。

小批量随机梯度gt按元素平方 的指数加权移动平均变量st

注:之前AdaDelta算法是只有按元素平方的,而Adam是增加了没有平方的小批量随机梯度也做指数加权移动平均。

Adam算法在RMSProp算法的基础上对小批量随机梯度 也做了指数加权移动平均。

调节学习率,观察并分析实验结果。

答:高于0.03就效果不太好了,迭代后期产生了曲折变化。原因想不明白。目前来看,Adam的学习率应当低一点

有人说Adam算法是RMSProp算法与动量法的结合。想一想,这是为什么?

答:因为对小批量随机梯度做指数加权移动平均就是动量法。